MEPhI_ROS2_drone Project: An Educational Platform for Robotics Based on ROS2

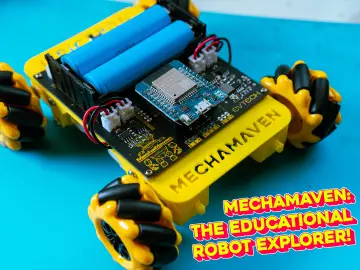

In an era of rapid progress in autonomous systems and artificial intelligence, as robots become part of everyday life, the MEPhI_ROS2_drone project offers an innovative approach to robotics education. Developed by students of the National Research Nuclear University MEPhI (NRNU MEPhI), this open-source two-wheeled robot is a versatile platform for learning and mastering Robot Operating System 2 (ROS2). It has been adapted through modifications to its software, mechanical design, and electrical schematics to better align with educational goals and to let users freely customize its structure. Because its 3D models and source code are openly available, the project is a practical tool for enthusiasts, students, and researchers who want to get started with autonomous navigation and sensor integration.

Hardware Foundation: Simplicity and Reliability in Every Component

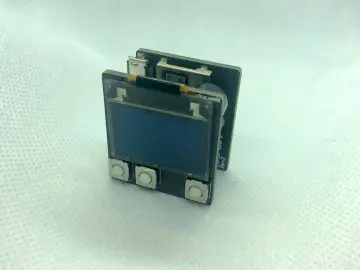

At the core of MEPhI_ROS2_drone is a combination of accessible, robust hardware suited to practical use. The main computer is a Raspberry Pi 4B, a compact single-board computer that runs the high-level ROS2 software. An Arduino UNO R3 handles motor control and other low-level functions, providing precise movement control. For sensing, the robot carries an RPLIDAR A1, a 360-degree 2D laser scanner used for environmental mapping and localization.
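The RPLIDAR A1 reports each sweep as distances sampled at evenly spaced angles, and mapping and localization software typically converts these polar readings into points in the sensor frame. The Python sketch below illustrates that conversion. The `LaserScan` class is a hypothetical stand-in that mirrors a few fields of the ROS2 `sensor_msgs/msg/LaserScan` message so the example runs without ROS2; the range limits in the demo are illustrative, not taken from the project's configuration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class LaserScan:
    """Hypothetical stand-in mirroring a few fields of sensor_msgs/msg/LaserScan."""
    angle_min: float          # angle of the first beam [rad]
    angle_increment: float    # angular step between consecutive beams [rad]
    range_min: float          # shortest valid range [m]
    range_max: float          # longest valid range [m]
    ranges: list[float] = field(default_factory=list)  # measured distances [m]


def scan_to_points(scan: LaserScan) -> list[tuple[float, float]]:
    """Convert valid polar range readings to (x, y) points in the sensor frame."""
    points = []
    for i, r in enumerate(scan.ranges):
        # Skip NaN/inf readings and values outside the sensor's valid interval.
        if not math.isfinite(r) or not (scan.range_min <= r <= scan.range_max):
            continue
        theta = scan.angle_min + i * scan.angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Four beams at 0, 90, 180 and 270 degrees; the third reading is invalid (inf).
    demo = LaserScan(
        angle_min=0.0,
        angle_increment=math.pi / 2,
        range_min=0.15,
        range_max=12.0,
        ranges=[1.0, 2.0, float("inf"), 0.5],
    )
    for x, y in scan_to_points(demo):
        print(f"({x:.2f}, {y:.2f})")
```

In a real ROS2 node, the same loop would run inside a subscription callback on the LiDAR's scan topic; the topic name and frame depend on the project's launch files.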

The robot's design is carefully thought out: the chassis consists of 3D-printed parts (.stl files) and laser-cut components (.dxf files), which keeps assembly accessible even for beginners. The electrical schematic in the repository details all necessary connections, from the power supply to the sensor interfaces. This is not just a robot but a modular platform: users can add new sensors or modify the chassis, turning it into a personalized tool for experimentation. The project's demonstration videos, ranging from laser-scan visualization in RViz2 to autonomous navigation, show its capabilities and the stability of the hardware in operation.

Software Framework: Seamless Integration with ROS2 for Cutting-Edge Development

MEPhI_ROS2_drone is fully integrated with ROS2 Humble Hawksbill, a long-term support (LTS) release of the open-source Robot Operating System that offers modularity, scalability, and compatibility with a wide range of libraries. The codebase combines several languages: C++ (nearly 50%) for performance-critical modules, Python (approximately 27%) for scripts and interfaces, and CMake and Shell for build automation. Key components include:

- SLAM and Navigation: Cartographer provides simultaneous localization and mapping (SLAM), and the Nav2 stack provides autonomous navigation. The robot can build a map while being driven manually and then navigate autonomously, avoiding obstacles.

- Control and Odometry: Two controllers from the ros2_control framework, diff_drive_controller (motor control and wheel odometry) and joint_state_broadcaster (joint state publishing), provide smooth movement and position tracking.

- Sensor Integration: LiDAR support for scanning the environment, plus telemetry and remote control capabilities.
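To illustrate what a differential-drive controller computes, the Python sketch below converts a body velocity command (linear `v`, angular `omega`) into left and right wheel speeds, and integrates the distance each wheel travels into a 2D pose estimate. It is a simplified textbook model, not the diff_drive_controller source code; the wheel separation and radius values are hypothetical placeholders, not measurements of this robot.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar robot pose in the odometry frame."""
    x: float = 0.0      # [m]
    y: float = 0.0      # [m]
    theta: float = 0.0  # heading [rad]


def twist_to_wheel_speeds(v: float, omega: float, wheel_separation: float,
                          wheel_radius: float) -> tuple[float, float]:
    """Inverse kinematics: (v [m/s], omega [rad/s]) -> (left, right) wheel speeds [rad/s]."""
    v_left = v - omega * wheel_separation / 2.0   # linear speed of the left wheel
    v_right = v + omega * wheel_separation / 2.0  # linear speed of the right wheel
    return v_left / wheel_radius, v_right / wheel_radius


def integrate_odometry(pose: Pose2D, d_left: float, d_right: float,
                       wheel_separation: float) -> Pose2D:
    """Dead-reckoning step from the distance each wheel travelled [m] since the last update."""
    d_center = (d_left + d_right) / 2.0              # distance moved by the axle midpoint
    d_theta = (d_right - d_left) / wheel_separation  # change in heading
    mid_theta = pose.theta + d_theta / 2.0           # midpoint heading for this step
    new_theta = pose.theta + d_theta
    return Pose2D(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to [-pi, pi]
    )


if __name__ == "__main__":
    WHEEL_SEPARATION = 0.20  # hypothetical [m]
    WHEEL_RADIUS = 0.033     # hypothetical [m]

    # Drive forward at 0.2 m/s while turning left at 0.5 rad/s.
    left, right = twist_to_wheel_speeds(0.2, 0.5, WHEEL_SEPARATION, WHEEL_RADIUS)
    print(f"left = {left:.2f} rad/s, right = {right:.2f} rad/s")

    # Ten encoder updates where the right wheel travels slightly farther: a gentle left arc.
    pose = Pose2D()
    for _ in range(10):
        pose = integrate_odometry(pose, 0.010, 0.012, WHEEL_SEPARATION)
    print(pose)
```

Because wheel odometry only integrates wheel motion, errors from slip and imprecise dimensions accumulate over time, which is why LiDAR-based SLAM is typically used alongside it for localization.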

Setup and configuration are designed to be as simple as possible. The Raspberry Pi runs Ubuntu 22.04 LTS, and udev rules assign fixed names (such as /dev/ttyUSB_ARDUINO) to the USB devices so that they are recognized consistently regardless of enumeration order. The Arduino firmware is flashed from a dedicated section of the repository, ROS2 is installed from the official repositories, and the dependency manager (rosdep) is then initialized. The workspace lives in the ~/MEPhI_ROS2_drone_ws directory: the repository is cloned there, dependencies are installed with rosdep, and the project is compiled with the colcon build command. The robot can then operate in simulation or on real hardware, with tools for mapping, navigation, and telemetry.
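The udev step can be illustrated with a small Python helper that prints rules giving USB serial devices stable symlinks. The vendor/product IDs shown are those commonly reported by a genuine Arduino UNO R3 (2341:0043) and by the CP2102 USB-UART adapter typically supplied with the RPLIDAR A1 (10c4:ea60); clone boards report different IDs, so check the actual values with `lsusb`. The ttyUSB_LIDAR name is hypothetical and not taken from the project.

```python
"""Print udev rules that give USB serial devices stable names under /dev."""

# Hypothetical device table: (vendor ID, product ID, symlink name).
# Verify the IDs on your own hardware with `lsusb`.
DEVICES = [
    ("2341", "0043", "ttyUSB_ARDUINO"),  # genuine Arduino UNO R3
    ("10c4", "ea60", "ttyUSB_LIDAR"),    # CP2102 USB-UART (hypothetical name)
]


def udev_rule(vendor_id: str, product_id: str, symlink: str, mode: str = "0666") -> str:
    """Build one rule matching a tty device by its USB IDs and adding /dev/<symlink>."""
    # Doubled braces emit literal {idVendor}/{idProduct} from the f-string.
    # MODE 0666 lets non-root users open the port; adding the user to the
    # dialout group is a stricter alternative.
    return (
        f'SUBSYSTEM=="tty", ATTRS{{idVendor}}=="{vendor_id}", '
        f'ATTRS{{idProduct}}=="{product_id}", MODE="{mode}", SYMLINK+="{symlink}"'
    )


if __name__ == "__main__":
    for vid, pid, name in DEVICES:
        print(udev_rule(vid, pid, name))
```

The printed lines could be saved to a file under /etc/udev/rules.d/ (for example a hypothetical 99-mephi-drone.rules) and activated with `sudo udevadm control --reload-rules` followed by `sudo udevadm trigger`.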

Key Features and Applications: From Learning to Innovation

The project stands out for its versatility, which makes it more than an educational tool: it is a laboratory for creativity.

- Autonomous Navigation: The robot can independently navigate pre-built maps, utilizing obstacle avoidance algorithms.

- Telemetry and Visualization: Integration with RViz2 allows real-time visualization of LiDAR data and odometry.

- Educational Potential: Well suited to ROS2 courses, letting students experiment with SLAM, controllers, and sensors and build practical robotics skills.

- Extensibility: The open-source code invites contributions, from adding new modules to integrating with AI models.
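Nav2 handles obstacle avoidance with costmaps, planners, and controllers, which are far more capable than anything that fits here. Purely as a simplified illustration of the underlying idea, the Python sketch below finds the closest LiDAR return in a sector ahead of the robot and scales down the forward speed as an obstacle approaches. It assumes the scan's 0 rad beam points along the robot's forward axis; the sector width and the stop and slow-down distances are hypothetical values, not project settings.

```python
import math


def min_range_in_sector(ranges: list[float], angle_min: float, angle_increment: float,
                        half_width: float, range_min: float = 0.0,
                        range_max: float = math.inf) -> float:
    """Closest valid return among beams within +/- half_width [rad] of straight ahead."""
    closest = math.inf
    for i, r in enumerate(ranges):
        theta = angle_min + i * angle_increment
        # Wrap to [-pi, pi] so beams just below 2*pi also count as "ahead".
        theta = math.atan2(math.sin(theta), math.cos(theta))
        if abs(theta) <= half_width and math.isfinite(r) and range_min <= r <= range_max:
            closest = min(closest, r)
    return closest


def safe_linear_speed(requested: float, closest: float,
                      stop_distance: float = 0.30, slow_distance: float = 0.80) -> float:
    """Scale a forward speed command down as the closest obstacle gets nearer."""
    if requested <= 0.0:  # stopped or reversing: the forward sector is not checked
        return requested
    if closest <= stop_distance:
        return 0.0
    if closest >= slow_distance:
        return requested
    # Linear ramp from 0 at stop_distance to the full request at slow_distance.
    return requested * (closest - stop_distance) / (slow_distance - stop_distance)


if __name__ == "__main__":
    # Hypothetical 360-beam scan at 1-degree spacing with one obstacle 0.5 m ahead.
    scan = [3.0] * 360
    scan[2] = 0.5
    closest = min_range_in_sector(scan, 0.0, math.radians(1.0), math.radians(15.0),
                                  range_min=0.15, range_max=12.0)
    print(closest, safe_linear_speed(0.2, closest))
```

A reactive check like this ignores the map entirely; Nav2 instead plans around obstacles using the pre-built map and live sensor data.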

Demonstration videos show the project in action, from manual map creation to smooth autonomous movement, illustrating its reliability and practicality.

Conclusion: A Contribution to the Future of Robotics

MEPhI_ROS2_drone is more than a technical project; it is a bridge between theory and practice that can inspire a new generation of engineers. Its open-source nature encourages community collaboration and helps drive progress in autonomous systems. Developed at a leading university, it underscores the role of education in innovation and offers an accessible tool for research in nuclear physics, AI, and robotics. The platform is designed to scale and could serve as a foundation for more advanced systems, such as robot swarms or VR integration. Through its participation in project competitions, MEPhI_ROS2_drone demonstrates not only technical quality but also a vision of a future in which robotics is accessible to everyone.