Story

While reading about the applications of UV (ultraviolet) radiation in industrial operations, especially anomaly detection, I became fascinated by the possibility of developing a proof-of-concept AI-driven industrial automation mechanism as a research project for detecting plastic surface anomalies. Thanks to its shorter wavelength, ultraviolet radiation can be employed in industrial machine vision systems to detect extremely small cracks, fissures, or gaps: UV exposure can reveal imperfections that visible light simply bounces off, catching production line mistakes overlooked by the human eye or by camera sensors oriented toward visible light.

In the spirit of developing a proof-of-concept research project, I wanted to build an easily accessible, repeatable, and feature-rich AI-based mechanism to showcase as many different experiment parameters as I could. Nonetheless, I quickly realized that high-grade or even semi-professional UV-sensitive camera sensors were too expensive, complicated to implement, or somewhat restrictive for the features I envisioned. Even UV-only high-precision bandpass filters were too complex to utilize since they are specifically designed for a handful of high-end full-spectrum digital camera architectures. Therefore, I started to scrutinize the documentation of various commercially available camera sensors to find a suitable candidate for my plastic surface anomaly detection mechanism, which relies on the direct application of UV (ultraviolet) radiation to plastic object surfaces. After my research, I noticed that the Raspberry Pi camera module 3 was promising as a cost-effective option since it is based on the 12-megapixel Sony IMX708 CMOS image sensor, which provides more than 40% blue responsiveness at 400 nm. Although I knew the camera module 3 could not produce 100% accurate UV-induced photography without heavily modifying the Bayer layer and the integrated camera filters, I decided to purchase one and experiment to see whether external camera filters would let me generate image samples accurate enough to expose a sufficient discrepancy between plastic surfaces with different defect stages under UV lighting.

In this regard, I started to inspect various blocking camera filters to isolate the wavelength range I required, 100-400 nm, by absorbing the visible light spectrum. After my research, I decided to utilize two different filter types separately to increase the breadth of UV-applied plastic surface image samples: a glass UV bandpass filter (ZWB ZB2) and color gel filters (with different light transmission levels: low, medium, and high).

Since I did not want to constrain my experiments to only one quality control condition by UV-exposure, I decided to employ three different UV light sources providing different wavelengths of ultraviolet radiation — 275 nm, 365 nm, and 395 nm.

✅ DFRobot UVC Ultraviolet Germicidal Lamp Strip (275 nm)

✅ DARKBEAM UV Flashlight (395 nm)

✅ DARKBEAM UV Flashlight (365 nm)

After conceptualizing my initial prototype with the mentioned components, I needed to find an applicable and repeatable method to produce plastic objects with varying stages of surface defects (none, high, and extreme), composed of different plastic materials. After thinking about different production methods, I decided to design a simple cube on Fusion 360 and alter the slicer settings to engender artificial but controlled surface defects (top layer bonding issues). In this regard, I was able to produce plastic objects (3D-printed) with a great deal of variation thanks to commercially available filament types, including UV-sensitive and reflective ones, resulting in an extensive image dataset of UV-applied plastic surfaces.

✅ Matte White

✅ Matte Khaki

✅ Shiny (Silk) White

✅ UV-reactive White (Fluorescent Blue)

✅ UV-reactive White (Fluorescent Green)

Before proceeding with developing my industrial-grade proof-of-concept device, I needed to ensure that all components, camera filters, UV light sources, and plastic materials (filaments) I chose were compatible and sufficient to generate the UV-applied plastic surface image samples with enough discrepancy (contrast), in accordance with the surface defect stages, to train a visual anomaly detection model. Therefore, I decided to build a simple data collection rig based on Raspberry Pi 4 to construct my dataset and review its validity. As I decided to utilize the Raspberry Pi camera module 3 Wide to cover more of the surface area of the target plastic objects, I designed unique multi-part camera lenses according to its 120° ultra-wide angle of view (AOV) to make the camera module 3 compatible with the glass UV bandpass filter and the color gel filters. Then, I designed two different rig bases (stands) compatible with UV light sources in the flashlight form and the strip form, enabling height adjustment while attaching the camera module case mounts (carrying lenses) to change the distance between the camera (image sensor) focal point and the target plastic object surface.

After building my simple data collection rig, I was able to:

✅ utilize two different types of camera filters — a glass UV bandpass filter (ZWB ZB2) and color gel filters (with different light transmission levels),

✅ adjust the distance between the camera (image sensor) focal point and the plastic object surfaces,

✅ apply three different UV wavelengths — 395 nm, 365 nm, and 275 nm — to the plastic object surfaces,

✅ and capture image samples of various plastic materials showcasing three different stages of surface defects — none, high, and extreme — while recording the concurrent experiment parameters.

After collecting UV-applied plastic surface images with all possible combinations of the mentioned experiment parameters, I managed to construct my extensive dataset and achieve a reliable discrepancy between the different surface defect stages to train a visual anomaly detection model. In this regard, I confirmed that the camera module 3 Wide produced sufficient UV-exposed image samples to continue developing my proof-of-concept mechanism.

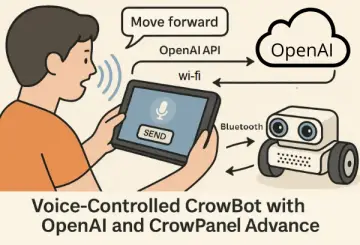

After training and building my FOMO-AD (visual anomaly detection model) on Edge Impulse Studio successfully, I decided not to continue developing my mechanism with the Raspberry Pi 4 and migrated my project to the Raspberry Pi 5 since I wanted to capitalize on the Pi 5’s dual-CSI ports, which allowed me to utilize two different types of camera modules (regular Wide and NoIR Wide) simultaneously. I decided to add the secondary camera module 3 NoIR Wide, which is based on the same IMX708 image sensor but has no IR filter, to review the visual anomaly model behaviour with a regular camera and a night-vision camera simultaneously to develop a feature-rich industrial-grade surface defect detection mechanism.

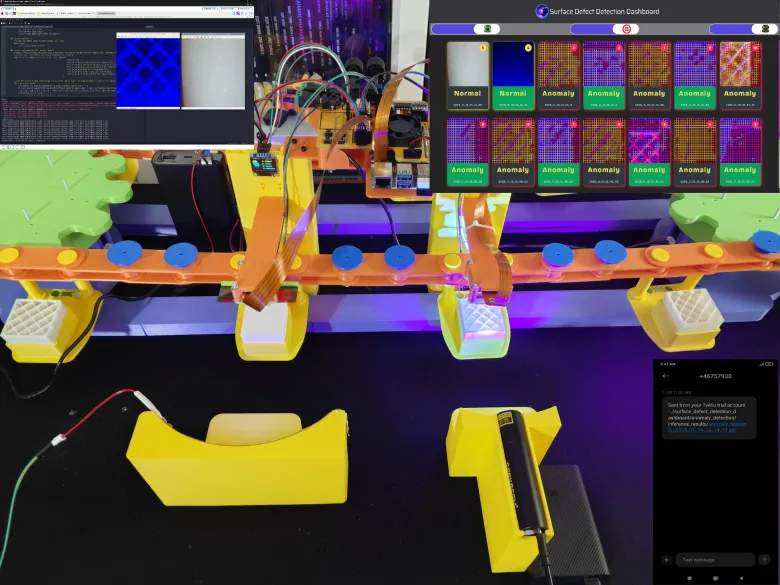

After configuring my dual camera set-up and visual anomaly detection model (FOMO-AD) on Raspberry Pi 5, I started to work on designing a complex circular conveyor mechanism based on my previous data collection rig, letting me place plastic objects under two cameras (regular Wide and NoIR Wide) automatically and run inferences with the images produced by them simultaneously.

Since I wanted to develop a sprocket-chain circular conveyor mechanism rather than a belt-driven one, I needed to design a lot of custom mechanical components to achieve my objectives and conduct fruitful experiments. Since I wanted to apply a different approach rather than limit switches to align plastic objects under the focal points of the cameras, I decided to utilize neodymium magnets and two magnetic Hall-effect sensor modules. While building these complex parts, I encountered various issues and needed to go through different iterations to complete my conveyor mechanism until I was able to demonstrate the features I planned. I documented my design mistakes and adjustments below to explain my development process thoroughly for this research study :)

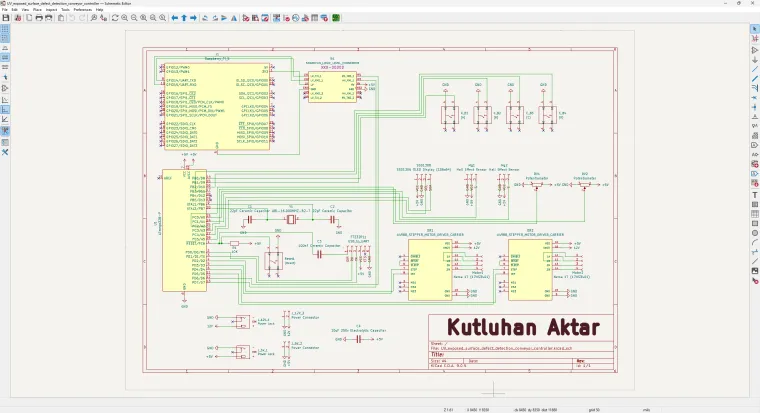

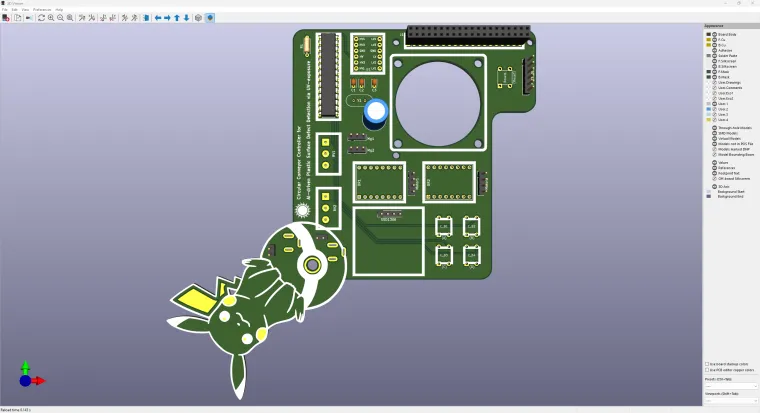

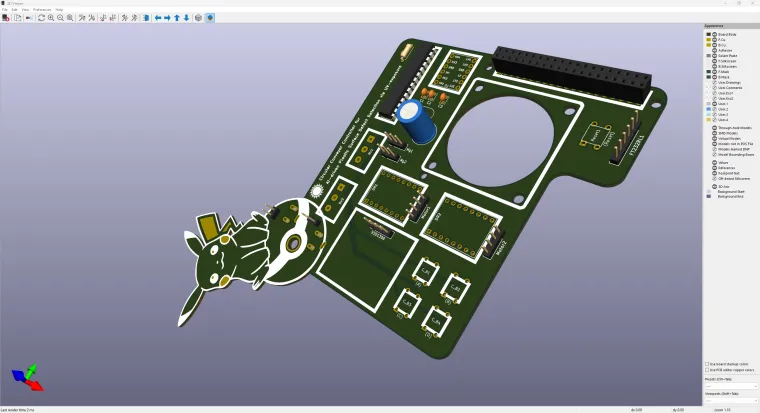

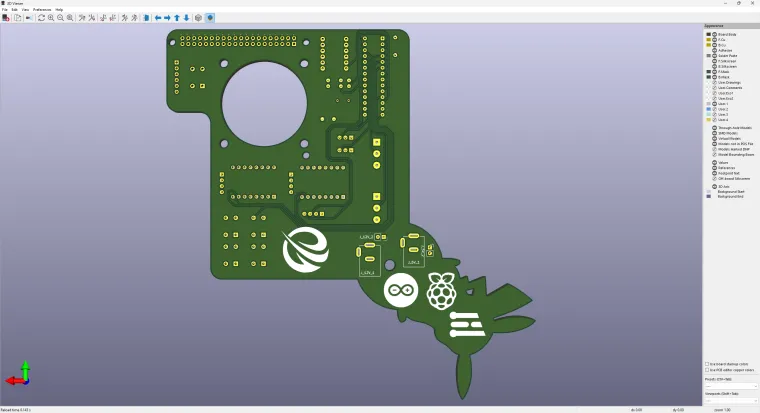

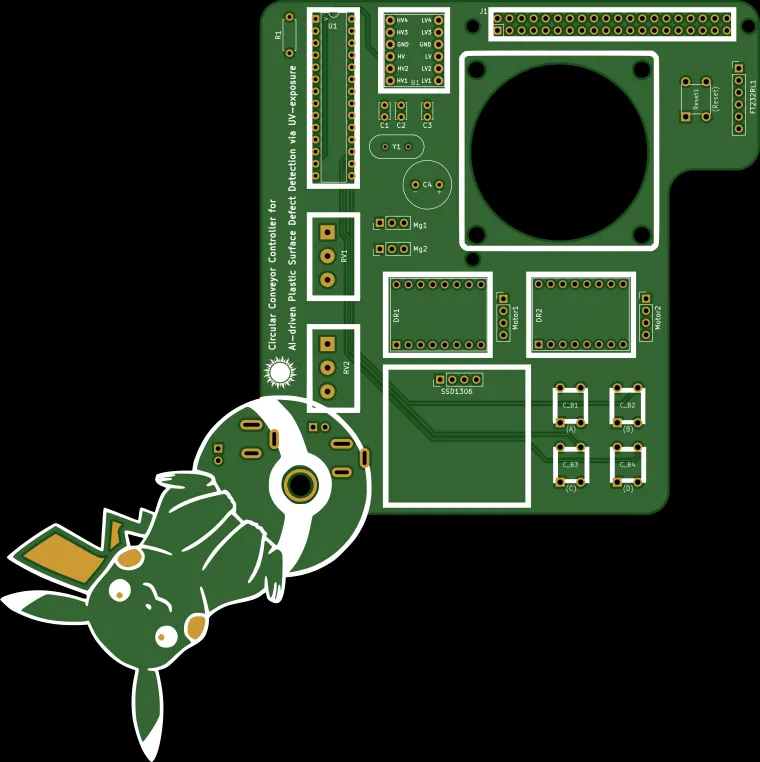

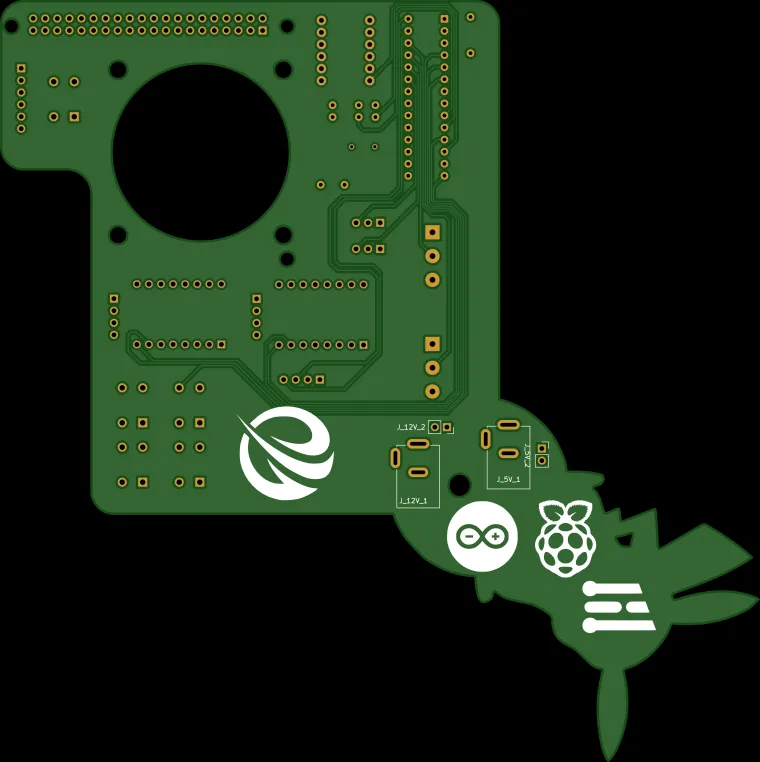

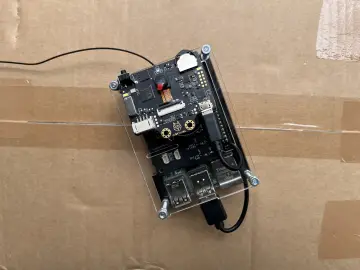

As I was starting to design the mechanical components, I decided to develop a unique controller board (PCB) as the primary interface of the sprocket-chain circular conveyor. To reduce the footprint of the controller board, I decided to utilize an ATmega328P and design the controller board (4-layer PCB) as a custom Raspberry Pi 5 shield (hat).

Finally, since I wanted to simulate the experience of operating an industrial-grade automation system, I developed an authentic web dashboard for the circular conveyor, which lets the user:

✅ review real-time inference results with timestamps,

✅ sort the inference results by camera type (regular or NoIR),

✅ and enable the Twilio integration to get the latest surface anomaly detection notifications as SMS.

By referring to the following tutorial, you can inspect the in-depth feature, design, and code explanations with the challenges I faced during the overall development process.

Development process, different prototype versions, design failures, and final results

As I was developing this research project, I encountered lots of problems due to complex mechanical component designs, especially related to the sprocket-chain mechanism, leading me to go through five different iterations. I documented the overall development process for the final mechanism in the following written tutorial thoroughly and showcased the features of the final version in the project demonstration videos.

Every feature of the final version of this proof-of-concept automation mechanism worked as planned and anticipated after my adjustments, except that the stepper motors (Nema 17) around which I designed the primary internal gears could not handle the extra torque applied to my custom-designed ball bearings (with 5 mm steel beads) after I recalibrated the chain tension with additional tension pins. I explained the reasons for the tension recalibration thoroughly in the following steps. In this regard, I needed to record some features related to sprocket movements (affixed to outer gears pivoted on the ball bearings) by removing or loosening the chain for the demonstration videos.

Data Collection Rig - Step 1: Defining the parameters for this research study, planning experiments, and outlining the research roadmap

Since I briefly covered my thought process for deciding the experiment parameters and sourcing components in the introduction, this section thoroughly covers the process of building the UV-applied plastic surface image sample (data) collection rig.

The simple data collection rig is the first version of this research project, which helped me to ensure that all components, camera filters, UV light sources, and plastic materials (filaments) I chose were compatible and sufficient to produce an extensive UV-applied plastic surface image dataset with enough discrepancy (contrast) to train a visual anomaly detection model.

As mentioned, after meticulously inspecting the documentation of various commercially available camera sensors, I decided to employ the Raspberry Pi camera module 3 Wide (120°) to capture images of plastic surfaces, showcasing different surface defect stages, under varying UV wavelengths. I studied the spectral sensitivity of the CMOS 12-megapixel Sony IMX708 image sensor and other available Raspberry Pi camera modules on the official Raspberry Pi camera documentation.

Since I decided to benefit from external camera filters to capture UV-oriented image samples with enough discrepancy (contrast) in accordance with the inherent surface defects, instead of heavily modifying the Bayer layer and the integrated camera filters, I sourced nearly full-spectrum color gel filters with different light transmission levels for blocking visible light. By stacking up these color gel filters, I managed to capture accurate UV-induced plastic surface images in the dark.

- Godox color gel filters with low light transmission

- Godox color gel filters with medium light transmission

- Godox color gel filters with high light transmission

Of course, only utilizing visible light-blocking color gel filters was not enough, considering the extent of this research study. In this regard, I also sourced a precise glass UV bandpass filter absorbing the visible light spectrum. Although I inspected the glass bandpass filter specifications from a different brand's documentation, I was only able to purchase one from AliExpress.

- UV bandpass filter (25 mm glass ZWB ZB2)

As I did not want to constrain this research project to showcase only one UV light source type while experimenting with quality control conditions by the direct application of UV (ultraviolet radiation) to plastic object surfaces, I decided to purchase three different UV light sources providing different UV wavelength ranges.

- DFRobot UVC Ultraviolet Germicidal Lamp Strip (275 nm)

- DARKBEAM UV Flashlight (395 nm)

- DARKBEAM UV Flashlight (365 nm)

Since I decided to manufacture plastic objects myself to control experiment parameters to develop a valid research project, I needed to find an applicable and repeatable method to produce plastic objects with varying stages of surface defects (none, high, and extreme) and source different plastic materials to produce a wide selection of plastic objects. After mulling over different production methods, I decided to produce my plastic objects with 3D printing and modify slicer settings to inflict artificial but controllable surface defects. Thanks to commercially available filament types, including UV-sensitive and reflective ones, I was able to source a great variety of materials to construct an extensive image dataset of UV-applied plastic surfaces.

- ePLA-Matte Milky White

- ePLA-Matte Light Khaki

- eSilk-PLA White (Shiny)

- PLA+ Luminous Green (UV-reactive - Fluorescent)

- PLA+ Luminous Blue (UV-reactive - Fluorescent)

#️⃣ First, I designed a simple cube on Autodesk Fusion 360 with dimensions of 40.00 mm x 40.00 mm x 40.00 mm.

#️⃣ I exported the cube as an STL file and uploaded the exported STL file to Bambu Studio.

#️⃣ Then, I modified the slicer (Bambu Studio) settings to implement artificial surface defects, in other words, inflicted top-layer bonding issues.

#️⃣ Since I wanted to showcase three different surface defect stages — none, high, and extreme — I copied the cube three times on the slicer.

#️⃣ For all three cubes, I selected the sparse infill density as 10% to outline the inflicted surface defects.

#️⃣ I utilized the standard slicer settings for the first cube, depicting the "none" surface defect stage.

#️⃣ For the second cube, I reduced the top shell layer number to 0 and selected the top surface pattern as the monotonic line, representing the extreme surface defect stage.

#️⃣ For the third cube, I lowered the top shell layer number to 1 and selected the top surface pattern as the Hilbert curve, representing the high surface defect stage.

#️⃣ However, as shown in the print preview, only reducing the top shell layer number would not lead to a protruding high defect stage, as I had hoped. Thus, I also reduced the top shell thickness to 0 to get the results I anticipated.

#️⃣ Since I decided to add the matte light khaki filament last, I sliced three khaki cubes with 15% sparse infill density to expand my plastic object sample size.
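The slicer changes listed above can be summarized as a small lookup table. This is only an illustrative sketch: the key names, the `cube_settings` helper, and the material label `matte_khaki` are hypothetical and are not Bambu Studio's own setting identifiers. The top shell thickness change is left out because the steps above do not tie it to a specific cube.

```python
# Illustrative summary of the slicer changes described above.
# Key names are hypothetical, not Bambu Studio setting identifiers.
DEFAULT_INFILL_PERCENT = 10  # sparse infill used for the first cube sets
KHAKI_INFILL_PERCENT = 15    # sparse infill used for the khaki cubes added last

DEFECT_STAGE_SETTINGS = {
    # None means "leave the slicer's standard value untouched".
    "none": {"top_shell_layers": None, "top_surface_pattern": None},
    "high": {"top_shell_layers": 1, "top_surface_pattern": "hilbert_curve"},
    "extreme": {"top_shell_layers": 0, "top_surface_pattern": "monotonic_line"},
}


def cube_settings(defect_stage, material):
    """Return the (hypothetical) settings record for one cube."""
    settings = dict(DEFECT_STAGE_SETTINGS[defect_stage])
    if material == "matte_khaki":
        settings["sparse_infill_percent"] = KHAKI_INFILL_PERCENT
    else:
        settings["sparse_infill_percent"] = DEFAULT_INFILL_PERCENT
    return settings


print(cube_settings("high", "matte_khaki"))
```

Keeping the three defect stages in one table makes it easier to confirm that every filament was sliced with the same defect recipe.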

After meticulously printing the three cubes showcasing different surface defect stages with each filament, I produced all plastic objects (15 in total) required to construct an extensive dataset to train a visual anomaly detection model and develop my industrial-grade proof-of-concept surface defect detection mechanism.

Data Collection Rig - Step 2: Designing unique camera lenses compatible with UV bandpass filter and color gel filters

Since I wanted to utilize external filters not compatible with the camera module 3 Wide, I needed to design unique camera lenses housing the color gel filters and the glass UV bandpass filter. In the case of the gel filters, I had to design the camera lens to make the color gel filters hot-swappable while experimenting with different light transmission levels: low, medium, and high. Conversely, in the case of the glass bandpass filter, I had to design the camera lens to be as rigid as possible to prevent any light from reaching the image sensor without passing through the bandpass filter. On top of all of these lens requirements, I also had to make sure that the color gel and the UV bandpass filter lenses were easily changeable during my experiments.

After sketching different lens arrangements, I decided to design a unique multi-part case for the camera module 3, which gave me the freedom to design lenses with minimal alterations to the base of the camera case and mount.

As I was working on these components, I leveraged some open-source CAD files to obtain accurate measurements:

✒️ Raspberry Pi Camera Module v3 (Step) | Inspect

✒️ Raspberry Pi 4 Model B (Step) | Inspect

#️⃣ First, I designed the camera module case, mount, and lens for the color gel filters on Fusion 360.

#️⃣ Since the camera module 3 Wide has a 120° ultra-wide angle of view (AOV), I aligned the focal point of the image sensor and the horizontal borders of the lens accordingly.

#️⃣ After completing the focal point alignment, I designed the glass UV bandpass filter lens by altering the color gel filter lens while preserving the 120-degree horizontal border placement.

#️⃣ Since the camera module case is composed of stackable parts, it can be utilized without adding the filter lenses as a stand-alone case.
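To illustrate the geometry behind aligning a lens opening with a wide angle of view, here is a rough sketch that treats the image sensor as a point and the view as a simple cone. It takes the 120° figure stated above as the full cone angle, which is an assumption (substitute the horizontal or diagonal figure from the camera documentation as appropriate). The function name and the 10 mm example distance are hypothetical and are not measurements from my CAD files.

```python
import math


def min_opening_radius_mm(distance_mm, full_angle_deg=120.0):
    """Radius an opening needs at distance_mm from the cone apex so that
    a view cone with the given full angle is not clipped."""
    if not 0 < full_angle_deg < 180:
        raise ValueError("full_angle_deg must be between 0 and 180")
    if distance_mm < 0:
        raise ValueError("distance_mm must not be negative")
    return distance_mm * math.tan(math.radians(full_angle_deg / 2))


# Hypothetical example: a filter seated 10 mm in front of the sensor.
print(round(min_opening_radius_mm(10.0), 2))  # 17.32
```

Because the radius grows with the tangent of the half angle, every extra millimeter between the sensor and the filter widens the required opening considerably at wide angles.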

After completing the camera module case, mount, and lens designs, I started to work on the placement of the camera module in relation to the target plastic object surface and the applied UV light source. To capture a precise UV-exposed image highlighting as many plastic surface defects as possible, I needed to make sure that the camera module's image sensor (IMX708) would catch the reflected ultraviolet radiation as optimally as possible during my experiments.

In this regard, I needed to align the focal point of the camera sensor and the focal point of the applied UV light source on perpendicular axes, intersecting at the center of the UV-applied plastic surface. Since I selected UV light sources in the flashlight and strip formats, the most efficient way to place my light sources was to calculate the arc angle required to create a concave (converging) shape to focus the ultraviolet radiation emitted by the UVC strip (275 nm) directly to the center of the target plastic surface. By knowing the center (focal point) of the calculated arc, I could easily place the remaining UV flashlights directly pointed at the center of the target plastic object.

#️⃣ As I decided to place UV light sources ten centimeters (100 mm) away from plastic objects and knew the length of the UVC strip, I was able to calculate the required arc angle effortlessly via this formula:

S = r * θ

S ➡ Arc length [length of the UVC strip]

r ➡ Radius [distance between the center of the plastic object surface and the arc center (focal point)]

θ ➡ Central angle in radians [angle/rad]

Arc_angle = (Arc_length * rad) / Radius, where rad is the number of degrees in one radian (180/π ≈ 57.296)
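As a quick numerical check of the formula above, the sketch below converts an arc length and radius into the central angle in degrees. The 100 mm radius comes from the distance stated above, whereas the 150 mm strip length is a hypothetical placeholder, since the actual UVC strip length is not given here.

```python
import math


def arc_angle_deg(arc_length_mm, radius_mm):
    """Central angle (in degrees) subtended by an arc: theta = S / r."""
    if radius_mm <= 0:
        raise ValueError("radius_mm must be positive")
    return math.degrees(arc_length_mm / radius_mm)


RADIUS_MM = 100.0                # light source distance stated in the text
EXAMPLE_STRIP_LENGTH_MM = 150.0  # hypothetical strip length for illustration

print(round(arc_angle_deg(EXAMPLE_STRIP_LENGTH_MM, RADIUS_MM), 2))  # 85.94
```

Here `math.degrees` performs the same multiplication by 180/π that the rad term represents in the formula.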

Data Collection Rig - Step 3: Designing the rig bases (stands) compatible with UV flashlights and strips

After calculating the arc angle, I continued to design the rig base compatible with the UVC strip, providing the concave (converging) shape to focus the emitted ultraviolet radiation directly onto the target plastic object surface. Based on the camera module mount, the rear part of the camera module case, I added a rack to the rig base to enable different levels (height adjustment) while attaching the camera module case (mount) to the rig base. In this regard, the rig base lets the user change the distance between the camera (image sensor) focal point and the target plastic object surface effortlessly.

I also added a simple holder to place the Raspberry Pi 4 on the top of the rig base easily while positioning the camera module case, providing hex-shaped snug-fit peg joints for easy installation.

As mentioned earlier, by knowing the center (focal point) of the calculated arc, I was able to modify the concave shape of the rig base for the UVC strip to design a subsequent rig base compatible with the remaining UV light sources in the flashlight format.

#️⃣ After completing the overall rig design, I exported all parts as STL files and uploaded them to Bambu Studio.

#️⃣ To boost the rigidity of the camera module case parts to produce a sturdy frame while experimenting with the external camera filters, I increased the wall loop (perimeter) number to 3.

#️⃣ To precisely place tree supports, I utilized support blockers while slicing the flashlight rig base.

Data Collection Rig - Step 4: Assembling the data collection rig and the custom camera filter lenses

I printed all of the data collection rig parts with my Bambu Lab A1 Combo, which also helped me a lot while printing the plastic objects with different filaments, thanks to the integrated AMS lite. Then, I proceeded with the assembly.

#️⃣ First, I started to assemble the multi-part camera module case. Since I designed all case parts stackable to swap external camera filters without changing the case frame, I was able to assemble the whole case with four M3 screw-nut pairs.

#️⃣ Since I specifically designed the external color gel filter camera lens to make gel filters hot swappable while experimenting with different light transmission levels — low, medium, and high — I was able to affix the gel camera lens directly to the case frame.

#️⃣ After completing the assembly of the camera module case with the external gel filter lens, I connected the camera module 3 to the Raspberry Pi 4 via an FFC cable (150 mm) to test the fidelity of the captured images.

#️⃣ On the other hand, as discussed, I designed the external UV bandpass filter camera lens as rigid as possible to avoid any light reaching the image sensor without passing through the glass UV bandpass filter. Therefore, I diligently applied instant glue (super glue) to permanently affix the glass bandpass filter to its unique camera lens.

#️⃣ After installing M3 brass threaded inserts with my TS100 soldering iron to strengthen the connection between the rig bases and the Raspberry Pi 4 holder, I continued to attach the UV light sources to their respective rig bases.

#️⃣ As the 275 nm UVC strip (FPC circuit board) came with an adhesive tape side, I was able to fasten the UVC strip to the dedicated concave shape of the rig base effortlessly.

#️⃣ As I specifically designed the subsequent rig base considering the measurements of my UV light sources in the flashlight format (395 nm and 365 nm), the installation of UV flashlights was as easy as sliding them into their dedicated slot.

#️⃣ After installing the UV light sources into their respective rig bases successfully, to initiate my preliminary experiments, I attached the camera module case to the rack of the UV flashlight-compatible rig base by utilizing four M3 screw-nut pairs.

#️⃣ Then, I attached the Raspberry Pi 4 holder to the top of the rig base via M3 screws through the peg joints and placed the Raspberry Pi 4 onto its holder.

Data Collection Rig - Step 5: Setting up and programming Raspberry Pi 4 to capture images with the camera module 3 while logging the applied experiment parameters

As you might have noticed, I have always explained setting up the Raspberry Pi OS in my previous tutorials. Nonetheless, the latest version of the Raspberry Pi Imager is very straightforward to the point of letting the user configure the SSH authentication method and the Wi-Fi credentials. You can inspect the official Raspberry Pi Imager documentation here.

#️⃣ After setting up Raspberry Pi OS successfully, I installed the required Python modules (libraries) to continue developing.

sudo apt-get update

sudo apt-get install python3-opencv
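Before writing the capture script, it can be useful to confirm that the modules it imports are actually available. The following is a minimal sketch using only the Python standard library; the `check_modules` helper is a hypothetical name, and the check only reports whether a module can be located, not whether the camera itself works.

```python
import importlib.util


def check_modules(names):
    """Map each top-level module name to True if Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


# The two third-party modules imported by the data collection script.
for name, found in check_modules(["cv2", "picamera2"]).items():
    print("{}: {}".format(name, "found" if found else "missing"))
```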

#️⃣ After updating the system and installing the required libraries, I started to work on the Python script to capture UV-applied plastic surface image samples and allow the user to record the concurrent experiment parameters to the image file names by entering user inputs.

📁 uv_defect_detection_collect_data_w_rasp_4_camera_mod_wide.py

⭐ Include the required system and third-party libraries.

⭐ Uncomment to modify the libcamera log level to bypass the libcamera warnings if you want clean shell messages while entering user inputs.

import cv2
from picamera2 import Picamera2, Preview
from time import sleep
from threading import Thread

# Uncomment to disable libcamera warnings while collecting data.
#import os
#os.environ["LIBCAMERA_LOG_LEVELS"] = "4"

#️⃣ To bundle all the functions to write a more concise script, I used a Python class.

⭐ In the __init__ function:

⭐ Define a picamera2 object for the Raspberry Pi camera module 3 Wide.

⭐ Define the output format and size (resolution) of the captured images to obtain an OpenCV-compatible buffer — RGB888. Then, configure the picamera2 object accordingly.

⭐ Initialize the video stream (feed) produced by the camera module 3.

⭐ Describe all possible experiment parameters in a Python dictionary for easy access.

class uv_defect_detection():
    def __init__(self):
        # Define the Raspberry Pi camera module 3 object.
        self.picam2 = Picamera2()
        # Define the camera module output format and size, considering OpenCV frame compatibility.
        capture_config = self.picam2.create_preview_configuration(raw={}, main={"format":"RGB888", "size":(640,640)})
        self.picam2.configure(capture_config)
        # Initialize the camera module video stream (feed).
        self.picam2.start()
        sleep(2)
        # Describe the UV-based surface anomaly detection parameters, including the object materials and the applied camera filter types.
        self.uv_params = {
            "cam_focal_surface_distance": ["3cm", "5cm"],
            "uv_source_wavelength": ["275nm", "365nm", "395nm"],
            "material": ["matte_white", "matte_khaki", "shiny_white", "fluorescent_blue", "fluorescent_green"],
            "filter_type": ["gel_low_tr", "gel_medium_tr", "gel_high_tr", "uv_bandpass"],
            "surface_defect": ["none", "high", "extreme"]
        }
        self.total_captured_sample_num = 0

    ...

⭐ In the display_camera_feed function:

⭐ Obtain the latest frame generated by the camera module 3.

⭐ Then, show the obtained frame on the screen via the built-in OpenCV tools.

⭐ Stop the camera feed and terminate the OpenCV windows once requested.

    def display_camera_feed(self):
        # Display the real-time video stream (feed) produced by the camera module 3.
        self.latest_frame = self.picam2.capture_array()
        cv2.imshow("UV-based Surface Defect Detection Preview", self.latest_frame)
        # Stop the camera feed once requested.
        if cv2.waitKey(1) & 0xFF == ord('q'):
            cv2.destroyAllWindows()
            self.picam2.stop()
            self.picam2.close()
            print("\nCamera Feed Stopped!")
            # Report that the feed has ended so the calling loop does not read from the closed camera.
            return False
        return True

⭐ In the camera_feed function, initiate the loop to show the latest frames consecutively to observe the real-time video stream (feed).

    def camera_feed(self):
        # Run the camera video stream (feed) loop until the feed is stopped.
        while self.display_camera_feed():
            pass

⭐ In the save_uv_img_samples function:

⭐ Define the file name and path of the current image sample by applying the passed experiment parameters.

⭐ Up to the passed batch number, save the latest successive frames with the given file name and path, differentiated by the sample number.

⭐ Wait half a second before obtaining the next available frame.

    def save_uv_img_samples(self, params, batch):
        # Based on the provided UV parameters, create the image sample name.
        img_file = "uv_samples/{}/{}_{}_{}_{}_{}".format(
            self.uv_params["surface_defect"][int(params[4])],
            self.uv_params["cam_focal_surface_distance"][int(params[0])],
            self.uv_params["uv_source_wavelength"][int(params[1])],
            self.uv_params["material"][int(params[2])],
            self.uv_params["filter_type"][int(params[3])],
            self.uv_params["surface_defect"][int(params[4])]
        )
        # Save the latest frames captured by the camera module consecutively according to the passed batch number.
        for i in range(batch):
            self.total_captured_sample_num += 1
            if (self.total_captured_sample_num > 30): self.total_captured_sample_num = 1
            _img_file = img_file + "_{}.jpg".format(self.total_captured_sample_num)
            cv2.imwrite(_img_file, self.latest_frame)
            # Wait before getting the next available frame.
            sleep(0.5)
            print("UV-exposed Surface Image Sample Saved: " + _img_file)

⭐ In the obtain_and_decode_input function:

⭐ Initiate the loop to obtain user inputs continuously.

⭐ Once the user input is fetched, decode the retrieved string to obtain the given experiment parameters as an array. Then, check the number of the extracted experiment parameters.

⭐ If matched, capture image samples up to the given batch number (10) and record the given experiment parameters to the sample file names.

    def obtain_and_decode_input(self):
        # Initiate the user input prompt to obtain the current UV parameters to capture image samples.
        while True:
            passed_params = input("Please enter the current UV parameters:")
            # Decode the passed string to extract the provided UV parameters.
            decoded_params = passed_params.split(",")
            # Check the number of the given parameters.
            if (len(decoded_params) == 5):
                # If matched, capture image samples according to the passed batch number.
                self.save_uv_img_samples(decoded_params, 10)
            else:
                print("Wrong parameters!")

#️⃣ As the built-in Python input function needs to check for new user input without interruptions, it cannot run with the real-time video stream generated by OpenCV in the same operation (runtime), which processes the latest frames produced by the camera module 3 continuously. Therefore, I utilized the built-in Python threading module to run multiple operations concurrently and synchronize them.

⭐ Define the uv_defect_detection class object.

⭐ Declare and initialize a Python thread for running the real-time video stream (feed).

⭐ Outside of the video stream operation (thread), check new user inputs continuously to obtain the provided experiment parameters.

uv_defect_detection_obj = uv_defect_detection()

# Declare and initialize Python thread for the camera module video stream (feed).
Thread(target=uv_defect_detection_obj.camera_feed).start()

# Obtain the provided UV parameters as user input continuously.
uv_defect_detection_obj.obtain_and_decode_input()
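To make the expected input format concrete, the sketch below shows how a comma-separated string of five indexes maps onto a sample file path, without needing the camera. It mirrors the parameter dictionary and naming pattern of the script above. The `build_sample_path` helper, the `PARAM_ORDER` list, and the range check are hypothetical additions for illustration and are not part of the original script.

```python
# Same options as the uv_params dictionary in the data collection script.
UV_PARAMS = {
    "cam_focal_surface_distance": ["3cm", "5cm"],
    "uv_source_wavelength": ["275nm", "365nm", "395nm"],
    "material": ["matte_white", "matte_khaki", "shiny_white",
                 "fluorescent_blue", "fluorescent_green"],
    "filter_type": ["gel_low_tr", "gel_medium_tr", "gel_high_tr", "uv_bandpass"],
    "surface_defect": ["none", "high", "extreme"],
}

# Order in which the five indexes are entered at the prompt.
PARAM_ORDER = ["cam_focal_surface_distance", "uv_source_wavelength",
               "material", "filter_type", "surface_defect"]


def build_sample_path(user_input, sample_num):
    """Translate an input such as "0,2,1,3,0" into the image sample path."""
    indexes = [int(value) for value in user_input.split(",")]
    if len(indexes) != len(PARAM_ORDER):
        raise ValueError("Expected 5 comma-separated indexes")
    values = []
    for key, index in zip(PARAM_ORDER, indexes):
        options = UV_PARAMS[key]
        if not 0 <= index < len(options):
            raise ValueError("Index out of range for " + key)
        values.append(options[index])
    # The defect stage is both the folder name and the last name component.
    return "uv_samples/{}/{}_{}.jpg".format(values[-1], "_".join(values), sample_num)


print(build_sample_path("0,2,1,3,0", 1))
# uv_samples/none/3cm_395nm_matte_khaki_uv_bandpass_none_1.jpg
```

Note that the target folders (for example, uv_samples/none) need to exist before saving, since the script writes the files directly into them.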

Data Collection Rig - Step 6: Constructing an extensive image dataset of surfaces of various plastic materials with different defect states under 395 nm, 365 nm, and 275 nm UV wavelengths

After I finished programming the Raspberry Pi 4, I proceeded to capture UV-applied plastic surface image samples showcasing all of the combinations of the experiment parameters to construct my extensive dataset.

I would like to reiterate all experiment parameters to elucidate the extent of the completed image dataset.

#️⃣ I utilized three different UV light (radiation) sources, providing varying wavelength ranges.

- 275 nm

- 365 nm

- 395 nm

#️⃣ I designed three cubes showcasing different surface defect stages.

- none

- high

- extreme

#️⃣ I printed these three cubes with five different plastic materials (filaments) to increase my sample size.

- Matte White

- Matte Khaki

- Shiny (Silk) White

- UV-reactive White (Fluorescent Blue)

- UV-reactive White (Fluorescent Green)

#️⃣ I applied two different types of external camera filters, making it four different filter options due to the gel filters' light transmission levels.

- UV bandpass filter (glass)

- Gel filters with low light transmission

- Gel filters with medium light transmission

- Gel filters with high light transmission

#️⃣ I stacked the four different primary colors provided by my gel filter set to pass the required blue-oriented wavelength range and block the rest of the visible light spectrum.

#️⃣ Since my color gel filter set included three gel filters for each primary color with varying light transmission levels, I decided to use low, medium, and high color gel filter groups, sets of four primary colors, during my experiments.

#️⃣ Since I specifically designed the rig base racks to be able to attach the camera module case mounts (carrying external lenses) at different height levels, I was able to adjust the distance between the camera (image sensor) focal point and the target plastic object surface. In this regard, I collected image samples at two different height levels to acquire samples with different zoom percentages.

- 3 cm

- 5 cm

#️⃣ Considering all of the mentioned experiment parameters, I painstakingly collected UV-applied plastic surface image samples with every possible combination and constructed my extensive dataset successfully.

- none / 3 cm / 395 nm / Gel (low transmission)

- high / 3 cm / 395 nm / Gel (low transmission)

- extreme / 3 cm / 395 nm / Gel (low transmission)

- none / 3 cm / 395 nm / Gel (medium transmission)

- high / 3 cm / 395 nm / Gel (medium transmission)

- extreme / 3 cm / 395 nm / Gel (medium transmission)

- none / 3 cm / 395 nm / Gel (high transmission)

- high / 3 cm / 395 nm / Gel (high transmission)

- extreme / 3 cm / 395 nm / Gel (high transmission)

- none / 3 cm / 395 nm / UV bandpass

- high / 3 cm / 395 nm / UV bandpass

- extreme / 3 cm / 395 nm / UV bandpass

- none / 3 cm / 365 nm / Gel (low transmission)

- high / 3 cm / 365 nm / Gel (low transmission)

- extreme / 3 cm / 365 nm / Gel (low transmission)

- none / 3 cm / 365 nm / Gel (medium transmission)

- high / 3 cm / 365 nm / Gel (medium transmission)

- extreme / 3 cm / 365 nm / Gel (medium transmission)

- none / 3 cm / 365 nm / Gel (high transmission)

- high / 3 cm / 365 nm / Gel (high transmission)

- extreme / 3 cm / 365 nm / Gel (high transmission)

- none / 3 cm / 365 nm / UV bandpass

- high / 3 cm / 365 nm / UV bandpass

- extreme / 3 cm / 365 nm / UV bandpass

- none / 3 cm / 275 nm / Gel (low transmission)

- high / 3 cm / 275 nm / Gel (low transmission)

- extreme / 3 cm / 275 nm / Gel (low transmission)

- none / 3 cm / 275 nm / Gel (medium transmission)

- high / 3 cm / 275 nm / Gel (medium transmission)

- extreme / 3 cm / 275 nm / Gel (medium transmission)

- none / 3 cm / 275 nm / Gel (high transmission)

- high / 3 cm / 275 nm / Gel (high transmission)

- extreme / 3 cm / 275 nm / Gel (high transmission)

- none / 3 cm / 275 nm / UV bandpass

- high / 3 cm / 275 nm / UV bandpass

- extreme / 3 cm / 275 nm / UV bandpass

- none / 5 cm / 395 nm / Gel (low transmission)

- high / 5 cm / 395 nm / Gel (low transmission)

- extreme / 5 cm / 395 nm / Gel (low transmission)

- none / 5 cm / 395 nm / Gel (medium transmission)

- high / 5 cm / 395 nm / Gel (medium transmission)

- extreme / 5 cm / 395 nm / Gel (medium transmission)

- none / 5 cm / 395 nm / Gel (high transmission)

- high / 5 cm / 395 nm / Gel (high transmission)

- extreme / 5 cm / 395 nm / Gel (high transmission)

- none / 5 cm / 395 nm / UV bandpass

- high / 5 cm / 395 nm / UV bandpass

- extreme / 5 cm / 395 nm / UV bandpass

- none / 5 cm / 365 nm / Gel (low transmission)

- high / 5 cm / 365 nm / Gel (low transmission)

- extreme / 5 cm / 365 nm / Gel (low transmission)

- none / 5 cm / 365 nm / Gel (medium transmission)

- high / 5 cm / 365 nm / Gel (medium transmission)

- extreme / 5 cm / 365 nm / Gel (medium transmission)

- none / 5 cm / 365 nm / Gel (high transmission)

- high / 5 cm / 365 nm / Gel (high transmission)

- extreme / 5 cm / 365 nm / Gel (high transmission)

- none / 5 cm / 365 nm / UV bandpass

- high / 5 cm / 365 nm / UV bandpass

- extreme / 5 cm / 365 nm / UV bandpass

- none / 5 cm / 275 nm / Gel (low transmission)

- high / 5 cm / 275 nm / Gel (low transmission)

- extreme / 5 cm / 275 nm / Gel (low transmission)

- none / 5 cm / 275 nm / Gel (medium transmission)

- high / 5 cm / 275 nm / Gel (medium transmission)

- extreme / 5 cm / 275 nm / Gel (medium transmission)

- none / 5 cm / 275 nm / Gel (high transmission)

- high / 5 cm / 275 nm / Gel (high transmission)

- extreme / 5 cm / 275 nm / Gel (high transmission)

- none / 5 cm / 275 nm / UV bandpass

- high / 5 cm / 275 nm / UV bandpass

- extreme / 5 cm / 275 nm / UV bandpass

#️⃣ As shown in the Python script documentation, I generated separate folders for each defect stage and recorded the applied experiment parameters to the image file names to produce a self-explanatory dataset for training a valid visual anomaly detection model.

- /none (3600 samples)

- /high (3600 samples)

- /extreme (3600 samples)
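The per-folder sample counts follow from the experiment parameters listed above. Here is a minimal sketch of that arithmetic, assuming 30 samples were kept for every parameter combination (the figure implied by 3600 samples per defect stage). The constant name `SAMPLES_PER_COMBINATION` and the label strings are illustrative, not taken from the dataset itself.

```python
from itertools import product

# Experiment parameters as listed in this step (label strings are illustrative).
distances = ["3cm", "5cm"]
wavelengths = ["275nm", "365nm", "395nm"]
materials = ["matte_white", "matte_khaki", "shiny_white",
             "fluorescent_blue", "fluorescent_green"]
filters = ["gel_low_tr", "gel_medium_tr", "gel_high_tr", "uv_bandpass"]
defect_stages = ["none", "high", "extreme"]

# Assumption: 30 samples kept per parameter combination.
SAMPLES_PER_COMBINATION = 30

# Every combination captured for a single defect stage.
combinations_per_stage = list(product(distances, wavelengths, materials, filters))
samples_per_stage = len(combinations_per_stage) * SAMPLES_PER_COMBINATION
total_samples = samples_per_stage * len(defect_stages)

print("Combinations per defect stage:", len(combinations_per_stage))
print("Samples per defect stage:", samples_per_stage)
print("Total samples:", total_samples)
```

Under that assumption, 2 distances, 3 wavelengths, 5 materials, and 4 filter options give 120 combinations per defect stage, which matches the 3600 samples recorded in each folder.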

Since I thought this dataset might be beneficial for different materials science projects, I wanted to make it open-source for anyone interested in training a neural network model with my samples or adding them to their existing project. Please refer to the project GitHub repository to examine the UV-applied plastic surface image dataset.

📌 Inspecting gel filters

🔎 3 cm / 395 nm / Gel (low transmission)

🔎 3 cm / 395 nm / Gel (medium transmission)

🔎 3 cm / 395 nm / Gel (high transmission)

🔎 3 cm / 365 nm / Gel (low transmission)

🔎 5 cm / 395 nm / Gel (low transmission)

🔎 5 cm / 365 nm / Gel (low transmission)

🔎 3 cm / 275 nm / Gel (low transmission)

🔎 5 cm / 275 nm / Gel (low transmission)

🔎 3 cm / 395 nm / UV bandpass

🔎 3 cm / 365 nm / UV bandpass

🔎 5 cm / 395 nm / UV bandpass

🔎 5 cm / 365 nm / UV bandpass

🔎 3 cm / 275 nm / UV bandpass

🔎 5 cm / 275 nm / UV bandpass

🖥️ Real-time video stream on Raspberry Pi 4 while collecting image samples

Circular Conveyor - Step 0: Migrating project from Raspberry Pi 4 to Raspberry Pi 5 to utilize two different camera module 3 versions (regular Wide and NoIR Wide) simultaneously

After successfully concluding my experiments with the data collection rig and constructing the UV-applied plastic surface image dataset with enough discrepancy (contrast) to train a visual anomaly detection model, I started to work on developing the industrial-grade proof-of-concept circular conveyor mechanism to explore different aspects of utilizing the substantial data I was collecting in a real-world manufacturing setting.

After training and building my FOMO-AD (visual anomaly detection) model on Edge Impulse Studio successfully (the training process is explained in the following step), I came to the conclusion that relying only on the camera module with which I constructed my dataset was not realistic for a real-world scenario since camera types and attributes differ in manufacturing settings. Thus, to review my visual anomaly detection model's behaviour with image samples generated by a different camera type, I decided to add a secondary camera to my mechanism. As the secondary camera, I selected the NoIR version of the Raspberry Pi camera module 3, which is based on the same IMX708 image sensor but has no integrated IR filter, so it produces distinctly different UV-induced image samples than the regular Wide module under the same procedure.

In this regard, I decided to migrate my project from the Raspberry Pi 4 to the Raspberry Pi 5 since I wanted to capitalize on the Pi 5’s dual-CSI ports, which allowed me to utilize two different types of camera modules (regular Wide and NoIR Wide) simultaneously and develop a feature-rich industrial-grade surface defect detection mechanism employing a regular camera and a night-vision camera.

#️⃣ Similar to the Raspberry Pi 4, after setting up the Raspberry Pi OS on the Raspberry Pi 5 via the Raspberry Pi Imager, I installed the required Python modules (libraries) to continue developing.

sudo apt-get update

sudo apt-get install python3-opencv

#️⃣ Unlike the Raspberry Pi 4, the dual CSI ports of the Raspberry Pi 5 use smaller 22-pin connectors, which are not compatible with the standard 15-pin FFC cables used with the Raspberry Pi 4. Thus, I purchased official FPC connector cables (300 mm and 500 mm) to attach the regular Wide and the NoIR Wide camera modules to the respective CSI ports.

#️⃣ Before proceeding with developing my circular conveyor mechanism with the dual camera setup, I needed to establish the workflow for running both cameras simultaneously. Thus, I decided to modify my previous Python script for capturing UV-applied plastic surface image samples with the camera module 3 Wide.

Even though I programmed the Raspberry Pi 5 to capture image samples produced by two different camera modules simultaneously, I did not expand my dataset or retrain the model with images generated by the camera module 3 NoIR Wide, as I wanted to study my model's behaviour while running inferences in a different manufacturing setting.

📁 uv_defect_detection_collect_data_w_rasp_5_camera_mod_wide_and_noir.py

⭐ Include the required system and third-party libraries.

⭐ Uncomment to modify the libcamera log level to bypass the libcamera warnings if you want clean shell messages while entering user inputs.

import cv2

from picamera2 import Picamera2, Preview

from time import sleep

from threading import Thread

# Uncomment to disable libcamera warnings while collecting data.

#import os

#os.environ["LIBCAMERA_LOG_LEVELS"] = "4"

#️⃣ To bundle all the functions to write a more concise script, I used a Python class.

⭐ In the __init__ function:

⭐ Define a picamera2 object addressing the CSI port of the Raspberry Pi camera module 3 Wide.

⭐ Define the output format and size (resolution) of the images captured by the regular camera module 3 to obtain an OpenCV-compatible buffer — RGB888. Then, configure the picamera2 object accordingly.

⭐ Initialize the video stream (feed) produced by the regular camera module 3.

⭐ Define a secondary picamera2 object addressing the CSI port of the Raspberry Pi camera module 3 NoIR Wide.

⭐ Define the output format and size (resolution) of the images captured by the camera module 3 NoIR to obtain an OpenCV-compatible buffer — RGB888. Then, configure the picamera2 object accordingly.

⭐ Initialize the video stream (feed) produced by the camera module 3 NoIR.

⭐ Describe all possible experiment parameters in a Python dictionary for easy access.

⭐ Define the camera attributes and respective total sample numbers for the concurrent data collection process.

class uv_defect_detection():

    def __init__(self):

        # Define the Picamera2 object for communicating with the Raspberry Pi camera module 3 Wide.

        self.cam_wide = Picamera2(0)

        # Define the camera module frame output format and size, considering OpenCV frame compatibility.

        capture_config = self.cam_wide.create_preview_configuration(raw={}, main={"format":"RGB888", "size":(640,640)})

        self.cam_wide.configure(capture_config)

        # Initialize the camera module continuous video stream (feed).

        self.cam_wide.start()

        sleep(2)

        # Define the Picamera2 object for communicating with the Raspberry Pi camera module 3 NoIR Wide.

        self.cam_noir_wide = Picamera2(1)

        # Define the camera module NoIR frame output format and size, considering OpenCV frame compatibility.

        capture_config_noir = self.cam_noir_wide.create_preview_configuration(raw={}, main={"format":"RGB888", "size":(640,640)})

        self.cam_noir_wide.configure(capture_config_noir)

        # Initialize the camera module NoIR continuous video stream (feed).

        self.cam_noir_wide.start()

        sleep(2)

        # Describe the surface anomaly detection conditions based on UV-exposure, including plastic material types, applied UV wavelengths, and the employed camera filter categories.

        self.uv_params = {

            "cam_focal_surface_distance": ["3cm", "5cm"],

            "uv_source_wavelength": ["275nm", "365nm", "395nm"],

            "material": ["matte_white", "matte_khaki", "shiny_white", "fluorescent_blue", "fluorescent_green"],

            "filter_type": ["gel_low_tr", "gel_medium_tr", "gel_high_tr", "uv_bandpass"],

            "surface_defect": ["none", "high", "extreme"]

        }

        # Define the required camera information for the data collection process.

        self.active_cam_info = [{"name": "wide", "total_captured_sample_num": 0}, {"name": "wide_noir", "total_captured_sample_num": 0}]

    ...

⭐ In the display_camera_feeds function:

⭐ Obtain the latest frame generated by the regular camera module 3.

⭐ Show the obtained frame on the screen via the built-in OpenCV tools.

⭐ Then, obtain the latest frame produced by the camera module 3 NoIR and show the retrieved frame in a separate window on the screen via the built-in OpenCV tools.

⭐ Stop both camera feeds (regular Wide and NoIR Wide) and terminate individual OpenCV windows once requested.

    def display_camera_feeds(self):

        # Display the real-time video stream (feed) produced by the camera module 3 Wide.

        self.latest_frame_wide = self.cam_wide.capture_array()

        cv2.imshow("UV-based Surface Defect Detection [Wide Preview]", self.latest_frame_wide)

        # Display the real-time video stream (feed) produced by the camera module 3 NoIR Wide.

        self.latest_frame_noir = self.cam_noir_wide.capture_array()

        cv2.imshow("UV-based Surface Defect Detection [NoIR Preview]", self.latest_frame_noir)

        # Stop all camera feeds once requested.

        if cv2.waitKey(1) & 0xFF == ord('q'):

            cv2.destroyAllWindows()

            self.cam_wide.stop()

            self.cam_wide.close()

            print("\nWide Camera Feed Stopped\n")

            self.cam_noir_wide.stop()

            self.cam_noir_wide.close()

            print("\nWide NoIR Camera Feed Stopped!\n")

⭐ In the camera_feeds function, initiate the loop to show the latest frames produced by the regular Wide and NoIR Wide camera modules consecutively to observe the real-time video streams (feeds) simultaneously.

    def camera_feeds(self):

        # Start the camera video streams (feeds) in a loop.

        while True:

            self.display_camera_feeds()

⭐ In the save_uv_img_samples function:

⭐ Define the file name and path of the current image sample by applying the passed experiment parameters.

⭐ The given parameters also determine whether the latest frame should be obtained from the regular camera module or the NoIR camera module.

⭐ Up to the passed batch number, save the latest successive frames generated by the selected camera module (regular or NoIR) with the given file name and path, differentiated by the sample number.

⭐ Wait half a second before obtaining the next available frame.

    def save_uv_img_samples(self, params, batch):

        # Based on the provided UV-based anomaly detection conditions and the selected camera type, generate the given image sample path and partial file name.

        selected_cam = self.active_cam_info[int(params[5])]["name"]

        img_file = "uv_samples/{}/{}/{}_{}_{}_{}_{}".format(

            selected_cam,

            self.uv_params["surface_defect"][int(params[4])],

            self.uv_params["cam_focal_surface_distance"][int(params[0])],

            self.uv_params["uv_source_wavelength"][int(params[1])],

            self.uv_params["material"][int(params[2])],

            self.uv_params["filter_type"][int(params[3])],

            self.uv_params["surface_defect"][int(params[4])]

        )

        # Save the latest frames captured by the selected camera type (the camera module 3 Wide or the camera module 3 NoIR Wide) consecutively according to the passed batch number.

        for i in range(batch):

            self.active_cam_info[int(params[5])]["total_captured_sample_num"] += 1

            if (self.active_cam_info[int(params[5])]["total_captured_sample_num"] > 30): self.active_cam_info[int(params[5])]["total_captured_sample_num"] = 1

            _img_file = img_file + "_{}.jpg".format(self.active_cam_info[int(params[5])]["total_captured_sample_num"])

            if(selected_cam == "wide"):

                cv2.imwrite(_img_file, self.latest_frame_wide)

            elif(selected_cam == "wide_noir"):

                cv2.imwrite(_img_file, self.latest_frame_noir)

            # Wait before getting the next available frame.

            sleep(0.5)

            print("UV-exposed Surface Image Sample Saved [" + selected_cam + "]: " + _img_file)

⭐ In the obtain_and_decode_input function:

⭐ Initiate the loop to obtain user inputs continuously.

⭐ Once the user input is fetched, decode the retrieved string to obtain the given experiment parameters as an array. Then, check the number of the extracted experiment parameters.

⭐ If matched, capture image samples up to the given batch number (10) with the selected camera module and record the given experiment parameters to the sample file names.

    def obtain_and_decode_input(self):

        # Initiate the user input prompt to obtain the given UV-exposure conditions for the data collection process.

        while True:

            passed_params = input("Please enter the current UV-exposure conditions:")

            # Decode the passed string to extract the provided parameters.

            decoded_params = passed_params.split(",")

            # Check the number of the extracted parameters.

            if (len(decoded_params) == 6):

                # If matched, capture image samples according to the passed batch number (10).

                self.save_uv_img_samples(decoded_params, 10)

            else:

                print("Incorrect parameter number!")

#️⃣ Since the built-in Python input function blocks until the user submits a new input, it cannot share the same operation (thread) with the real-time video streams displayed by OpenCV, which must process the latest frames produced by the regular Wide and NoIR Wide camera modules continuously. Therefore, I utilized the built-in Python threading module to run both operations concurrently.

⭐ Define the uv_defect_detection class object.

⭐ Declare and initialize a Python thread for running the real-time video streams (feeds) produced by the regular camera module 3 and the camera module 3 NoIR.

⭐ Outside of the video streams operation (thread), check new user inputs continuously to obtain the provided experiment parameters.

uv_defect_detection_obj = uv_defect_detection()

# Declare and initialize a Python thread for the camera module 3 Wide and the camera module 3 NoIR Wide video streams (feeds).

Thread(target=uv_defect_detection_obj.camera_feeds).start()

# Obtain the provided UV-exposure conditions as user input continuously.

uv_defect_detection_obj.obtain_and_decode_input()
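The input prompt expects six comma-separated list indices, in the order distance, wavelength, material, filter, defect stage, and camera. As a hedged sketch of how such an input maps to a sample path, the helper below resolves each index against the same parameter lists used in the script. The function name `decode_conditions` and the stricter range validation are my own illustrative additions, not part of the original script.

```python
# Parameter lists copied from the uv_params dictionary and active_cam_info of the script.
UV_PARAMS = {
    "cam_focal_surface_distance": ["3cm", "5cm"],
    "uv_source_wavelength": ["275nm", "365nm", "395nm"],
    "material": ["matte_white", "matte_khaki", "shiny_white",
                 "fluorescent_blue", "fluorescent_green"],
    "filter_type": ["gel_low_tr", "gel_medium_tr", "gel_high_tr", "uv_bandpass"],
    "surface_defect": ["none", "high", "extreme"],
}
CAMERAS = ["wide", "wide_noir"]


def decode_conditions(raw):
    """Hypothetical helper: turn an input such as '0,2,1,3,0,1' into a partial sample path."""
    fields = [field.strip() for field in raw.split(",")]
    if len(fields) != 6:
        raise ValueError("Expected 6 comma-separated indices.")
    keys = list(UV_PARAMS) + ["camera"]
    options = list(UV_PARAMS.values()) + [CAMERAS]
    decoded = {}
    for key, choices, field in zip(keys, options, fields):
        index = int(field)  # Raises ValueError on non-numeric input.
        if not 0 <= index < len(choices):
            raise ValueError("Index {} is out of range for {}.".format(index, key))
        decoded[key] = choices[index]
    # Same layout as save_uv_img_samples: camera / defect stage / joined parameters.
    return "uv_samples/{}/{}/{}_{}_{}_{}_{}".format(
        decoded["camera"],
        decoded["surface_defect"],
        decoded["cam_focal_surface_distance"],
        decoded["uv_source_wavelength"],
        decoded["material"],
        decoded["filter_type"],
        decoded["surface_defect"],
    )


print(decode_conditions("0,2,1,3,0,1"))
```

Validating the indices before saving avoids an unhandled IndexError inside the capture loop when a value is mistyped, at the cost of a few extra lines.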

Circular Conveyor - Step 1: Building a visual anomaly detection model (FOMO-AD) w/ Edge Impulse Enterprise

Since Edge Impulse provides developer-friendly tools for advanced AI applications and supports almost every development board due to its model deployment options, I decided to utilize Edge Impulse Enterprise to build my visual anomaly detection model. Also, Edge Impulse Enterprise incorporates elaborate model architectures for advanced computer vision applications and optimizes the state-of-the-art vision models for edge devices and single-board computers such as the Raspberry Pi 5.

Among the diverse machine learning algorithms provided by Edge Impulse, I decided to employ FOMO-AD (visual anomaly detection), which is specifically developed for handling unseen data, like defects in a product during manufacturing.

While labeling the UV-applied plastic surface image samples, I needed to utilize the default classes required by Edge Impulse to enable the F1 score calculation:

- no anomaly

- anomaly

Conveniently, Edge Impulse Enterprise provides developers with advanced tools to build, optimize, and deploy each available machine learning algorithm as supported firmware for nearly any device. Therefore, after training and validating, I was able to deploy my FOMO-AD model as an EIM binary for Linux (AARCH64) compatible with the Raspberry Pi 5.

To utilize the advanced AI tools provided by Edge Impulse, you can register here.

Furthermore, you can inspect this FOMO-AD visual anomaly detection model on Edge Impulse as a public project.

Circular Conveyor - Step 1.1: Uploading and labeling the UV-applied plastic surface image samples

#️⃣ First, I created a new project on my Edge Impulse Enterprise account.

#️⃣ To label image samples manually for FOMO-AD visual anomaly detection models, go to Dashboard ➡ Project info ➡ Labeling method and select One label per data item.

#️⃣ To upload training and testing UV-applied plastic surface image samples as individual files, I opened the Data acquisition section and clicked the Upload data icon.

#️⃣ I utilized default Edge Impulse configurations to distinguish training and testing image samples to enable the F1 score calculation.

#️⃣ For training samples, I selected the Training category and entered no anomaly as their shared label.

#️⃣ For testing samples, I selected the Testing category and entered anomaly as their shared label.

As I wanted this visual anomaly detection model to represent all of my experiments, I uploaded all image samples with the none surface defect stage as the training samples and all image samples with the extreme surface defect stage as the testing samples.

- /none (3600 samples)

- /extreme (3600 samples)
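The upload split described above boils down to a folder-to-label mapping. The sketch below only plans that split on disk and does not call any Edge Impulse API. The dataset root path and the `plan_upload` helper are hypothetical names used for illustration.

```python
from pathlib import Path

# Folder name -> (Edge Impulse category, label), as described in this step.
# The /high folder is not part of this split.
SPLIT = {
    "none": ("training", "no anomaly"),
    "extreme": ("testing", "anomaly"),
}


def plan_upload(dataset_root):
    """Hypothetical helper: list (file, category, label) for every sample image."""
    plan = []
    for folder, (category, label) in SPLIT.items():
        for image in sorted(Path(dataset_root, folder).glob("*.jpg")):
            plan.append((image, category, label))
    return plan


if __name__ == "__main__":
    # Assumed dataset root; adjust to wherever the image folders are stored.
    for image, category, label in plan_upload("uv_samples"):
        print(category, label, image.name)
```

Because FOMO-AD learns only from normal samples, every training item carries the no anomaly label, while the anomalous samples are reserved for testing.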

Circular Conveyor - Step 1.2: Training the FOMO-AD (visual anomaly detection) model

An impulse (an application developed and optimized by Edge Impulse) takes raw data, applies signal processing to extract features, and then utilizes a learning block to classify new data.

For my application, I created the impulse by employing the Image processing block and the Visual Anomaly Detection - FOMO-AD learning block.

The Image processing block processes the passed raw image input as grayscale or RGB (optional) to produce a reliable features array.

The FOMO-AD learning block represents the officially supported machine learning algorithms, based on a selectable backbone for feature extraction and a scoring function (PatchCore, GMM anomaly detection).

#️⃣ First, I opened the Impulse design ➡ Create impulse section, set the model image resolution to 320 x 320, and selected the Fit shortest axis resize mode so as to scale (resize) the given image samples precisely. To complete the impulse creation, I clicked Save Impulse.

#️⃣ To modify the raw image features in the applicable format, I navigated to the Impulse design ➡ Image section, set the Color depth parameter as RGB, and clicked Save parameters.

#️⃣ Then, I proceeded to click Generate features to extract the required features for training by applying the Image processing block.

#️⃣ After extracting features successfully, I navigated to the Impulse design ➡ Visual Anomaly Detection section and modified the neural network settings and architecture to achieve reliable accuracy and validity.

#️⃣ First, I selected the Training processor as GPU since I uploaded an extensive dataset providing more than 3000 training image samples.

#️⃣ According to my prolonged experiments, I assigned the final model settings as follows.

📌 Training settings:

- Training processor ➡ GPU

- Capacity ➡ High

📌 Neural network architecture:

- MobileNetV2 0.35

- Gaussian Mixture Model (GMM)

#️⃣ Adjusting Capacity higher means a higher number of (Gaussian) components, making the visual anomaly detection model more adapted to the original distribution.

#️⃣ After training the model with the final configurations, Edge Impulse did not evaluate the F1 score (accuracy) due to the nature of the visual anomaly model training process.

Circular Conveyor - Step 1.3: Evaluating the model accuracy and deploying the validated model

Testing FOMO-AD visual anomaly detection models is essential for getting precise results while running inferences on the device. In addition to evaluating the F1 score (accuracy), Edge Impulse allows the user to tweak the learning block sensitivity by adjusting the anomaly (confidence) threshold, resulting in a much more adaptable model for real-world operations.

#️⃣ First, to obtain the validation score of the trained model based on the provided testing samples, I navigated to the Impulse design ➡ Model testing section and clicked Classify all.

#️⃣ Based on the initial F1 score, I started to rigorously experiment with different model variants and anomaly (confidence) thresholds to pinpoint the optimum settings for the real-world conditions.

#️⃣ Although Edge Impulse suggested 7.3 as the confidence threshold based on the top anomaly scores in the training dataset, it performed poorly for the Unoptimized (float32) model variant. According to my experiments, I found out that a 2.12 confidence threshold is the sweet spot for the unoptimized version, leading to an 83.74% F1 score (accuracy).

#️⃣ On the other hand, the Quantized (int8) model variant performed best with a confidence threshold of 8, leading to a 100% F1 score (accuracy).
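To illustrate why the confidence threshold shifts the F1 score, here is a minimal sketch that flags a sample as anomalous when its anomaly score is at or above the threshold and then computes F1 for the anomaly class. The scores and labels are made-up values for illustration only, not results from this model, and the comparison rule (score >= threshold) is an assumption about how the threshold is applied.

```python
def f1_score(scores, labels, threshold):
    """F1 for the anomaly class; labels are True for anomalous samples."""
    predictions = [score >= threshold for score in scores]
    tp = sum(p and l for p, l in zip(predictions, labels))
    fp = sum(p and not l for p, l in zip(predictions, labels))
    fn = sum((not p) and l for p, l in zip(predictions, labels))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up anomaly scores and ground-truth labels (illustrative only).
scores = [1.5, 2.5, 3.0, 6.0, 9.0, 12.0]
labels = [False, False, True, True, True, True]

for threshold in (2.0, 3.0, 8.0):
    print(threshold, round(f1_score(scores, labels, threshold), 3))
```

With these toy values, a threshold that is too low lets a normal sample through as a false positive, while one that is too high misses real anomalies, so both extremes lower the F1 score.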

#️⃣ To deploy the validated model optimized for my hardware, I navigated to the Impulse design ➡ Deployment section and searched for Linux(AARCH64).

#️⃣ I chose the Quantized (int8) model variant (optimization) to achieve the optimal performance while running the deployed model.

#️⃣ Finally, I clicked Build to download the produced EIM binary, containing the trained visual anomaly detection model.

Circular Conveyor - Step 2: Setting up Apache web server with MariaDB database and Edge Impulse Linux Python SDK on Raspberry Pi 5

As mentioned earlier, I decided to develop a web dashboard for the circular conveyor mechanism and host it locally on the Raspberry Pi 5. Thus, I decided to utilize Apache as the local server for my web dashboard, providing all necessary tools to build a full-fledged PHP-based application.

To easily access and run my FOMO-AD visual anomaly detection model (EIM binary) via a Python script, I also installed the Edge Impulse Linux Python SDK on the Raspberry Pi 5.

#️⃣ First, I installed the Apache web server with a MariaDB database, the PHP MySQL package, and the PHP cURL package via the terminal.

sudo apt-get install apache2 php mariadb-server php-mysql php-curl -y

#️⃣ To utilize the MariaDB database, I set the root user by strictly following the secure installation prompt.

sudo mysql_secure_installation

#️⃣ After setting up the Apache server, I proceeded to install the official Edge Impulse Python SDK with all dependencies.

sudo apt-get install libatlas-base-dev libportaudio2 libportaudiocpp0 portaudio19-dev python3-pip

sudo pip3 install pyaudio edge_impulse_linux --break-system-packages

#️⃣ Since I did not create a virtual environment, I needed to utilize the break-system-packages command-line argument to bypass the system-wide package installation error.

As discussed earlier, I decided to design a unique controller board (PCB) for the circular conveyor mechanism in the form of a Raspberry Pi 5 shield (hat). Since the controller board would be based on an ATmega328P, I decided to establish the data transfer via serial communication. In this regard, before prototyping the circular conveyor interface, I needed to enable the UART serial communication protocol on the Raspberry Pi 5.

#️⃣ To activate the UART serial communication via GPIO pins, I enabled the Serial Port interface on Raspberry Pi Configuration. Then, I rebooted the Pi 5.

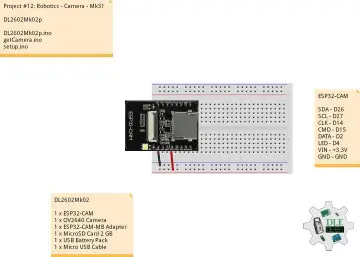

Circular Conveyor - Step 3: Prototyping and initial programming of the circular conveyor interface with Arduino Uno

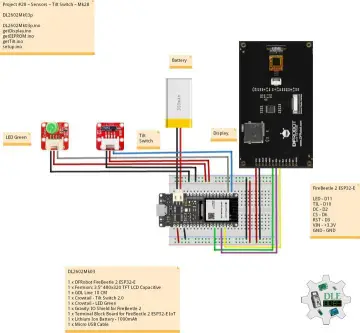

Before proceeding with developing the mechanical parts and the controller board (interface) of the circular conveyor mechanism, I needed to ensure that every sensor and component was operating as anticipated. In this regard, I decided to utilize an Arduino Uno to prototype the circular conveyor interface. Since I had an original Arduino Uno, which is based on the ATmega328P, I was able to test and run my initial programming of the conveyor interface effortlessly.

#️⃣ As I decided to design two conveyor drivers sharing the load while rotating the conveyor chain, I utilized two Nema 17 (17HS3401) stepper motors controlled by two separate A4988 driver modules.

#️⃣ Since I wanted to utilize neodymium magnets to align the center of the plastic object surfaces held by the plastic object carriers of the circular conveyor with the focal points of both camera modules (regular Wide and NoIR Wide), I used two magnetic Hall-effect sensor modules (KY-003).

#️⃣ To provide the user with a feature-rich interface, I connected an SSD1306 OLED display and four control buttons.

#️⃣ To enable the user to adjust the conveyor attributes manually, I added two long-shaft potentiometers.

#️⃣ Since I needed to supply power for a lot of current-demanding electronic components with different operating voltages, I decided to convert my old ATX power supply unit (PSU) into a simple bench power supply by utilizing an ATX adapter board (XH-M229) providing stable 3.3V, 5V, and 12V. For each power output of the adapter board, I soldered wires to attach a DC-barrel-to-wire jack (male) in order to create a production-ready bench power supply.

#️⃣ Furthermore, as a part of my initial programming experiments, I reviewed the data transmission between the software serial port of the Arduino Uno and the hardware UART serial port (GPIO) of the Raspberry Pi 5.

#️⃣ Since the Arduino Uno and the ATmega328P operate at 5V while the Raspberry Pi 5 GPIO pins use 3.3V logic levels, their pins cannot be connected directly, even for serial communication. Therefore, I utilized a bi-directional logic level converter to shift the voltage between the respective pin connections.

Circular Conveyor - Step 3.1: Setting up and configuring ATMEGA328P-PU as an Arduino Uno

After completing my initial Arduino Uno prototyping and programming, I started to set up my ATmega328P-PU to be able to move electrical components from the Arduino Uno to its corresponding pins to continue developing the circular conveyor interface.

#️⃣ First, based on the ATmega328P datasheet, I built the required circuitry to drive the ATmega328P single-chip microcontroller, consisting of these electrical components:

- 16.000 MHz crystal [1]

- 10K resistor [1]

- 22pF ceramic disc capacitor [2]

- 10µF 250V electrolytic capacitor [1]

#️⃣ Since I did not want to add an onboard USB port to my PCB, I decided to upload code files to the ATmega328P via an external FTDI adapter (programming board), which requires an additional 100nF ceramic disc capacitor while connecting its DTR/RTS pin to the reset pin of the ATmega328P.

📌 DroneBot Workshop provided in-depth written and video tutorials regarding utilizing the ATmega328P and the FTDI adapter, from which I got the connection schematics above. So, please refer to DroneBot Workshop's tutorial to get more information about the ATmega328P microcontroller.

Since I wanted to program the ATmega328P as an Arduino Uno via the Arduino IDE, I purchased ATmega328P-PU chips, which are supposed to come with the Arduino bootloader preloaded (burned) into their flash memory. Nonetheless, none of my ATmega328P-PU chips was recognized by the latest version of the Arduino IDE (2.3.6).

Therefore, I needed to burn the required bootloader manually to my ATmega328P-PU by employing a different Arduino Uno, other than the one I used to prototype the conveyor interface, as an in-system programmer (ISP), as depicted in this official Arduino guideline.

#️⃣ First, I connected the Arduino Uno to the computer and selected its COM port on the Arduino IDE.

#️⃣ Then, I navigated to File ➡ Examples ➡ ArduinoISP and uploaded the ArduinoISP example to the Arduino Uno.

#️⃣ Since the ISP example uses the SPI protocol to burn the bootloader, I connected the hardware SPI pins (MISO, MOSI, and SCK) of the Arduino Uno to the corresponding SPI pins of the ATmega328P.

#️⃣ I also connected pin 10 to the ATmega328P reset pin since the ISP example uses D10 to reset the target microcontroller, rather than the SS pin.

- MOSI (D11) ➡ 17

- MISO (D12) ➡ 18

- SCK (D13) ➡ 19

- D10 ➡ 1

#️⃣ After connecting the Arduino Uno SPI pins to the ATmega328P SPI pins, I selected Tools ➡ Programmer ➡ Arduino as ISP. Then, I selected the board as Arduino Uno since I wanted to burn the Arduino Uno bootloader to the target ATmega328P chip.

#️⃣ After configuring bootloader settings, I clicked Tools ➡ Burn Bootloader to initiate the bootloader burning procedure.

#️⃣ After burning the Arduino Uno bootloader to my ATmega328P chip successfully, I uploaded a simple program via the external FTDI adapter to test whether the ATmega328P chip behaves as an Arduino Uno.

#️⃣ Once I confirmed the ATmega328P worked as an Arduino Uno, I connected a button to its reset pin and GND in order to restart my program effortlessly in case of logic errors.

#️⃣ Finally, I migrated all of the electrical components to the ATmega328P, treating its pin names as equivalent to the Arduino Uno's.

// Connections

// ATMEGA328P-PU :

// Nema 17 (17HS3401) Stepper Motor w/ A4988 Driver Module [Motor 1]

// 5V ------------------------ VDD

// GND ------------------------ GND

// D2 ------------------------ DIR

// D3 ------------------------ STEP

// Nema 17 (17HS3401) Stepper Motor w/ A4988 Driver Module [Motor 2]

// 5V ------------------------ VDD

// GND ------------------------ GND

// D4 ------------------------ DIR

// D5 ------------------------ STEP

// SSD1306 OLED Display (128x64)

// 5V ------------------------ VCC

// GND ------------------------ GND

// A4 ------------------------ SDA

// A5 ------------------------ SCL

// Raspberry Pi 5

// D6 (RX) ------------------------ GPIO 14 (TXD)

// D7 (TX) ------------------------ GPIO 15 (RXD)

// Magnetic Hall Effect Sensor Module (KY-003) [First]

// GND ------------------------ -

// 5V ------------------------ +

// A0 ------------------------ S

// Magnetic Hall Effect Sensor Module (KY-003) [Second]

// GND ------------------------ -

// 5V ------------------------ +

// A1 ------------------------ S

// Long-shaft B4K7 Potentiometer (Speed)

// A2 ------------------------ Signal

// Long-shaft B4K7 Potentiometer (Station)

// A3 ------------------------ Signal

// Control Button (A)

// D8 ------------------------ +

// Control Button (B)

// D9 ------------------------ +

// Control Button (C)

// D10 ------------------------ +

// Control Button (D)

// D11 ------------------------ +
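As a quick sanity check on the wiring list above, the pin assignments can be written down as a table and scanned for accidental reuse. The following is a minimal desktop C++ sketch, not part of the conveyor firmware: the PinUse struct, the kPinMap table, and the first_duplicate_pin helper are hypothetical names introduced for illustration, and the role strings are just short labels for the components listed above (power and ground lines are omitted).

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// One row of the wiring list: an ATmega328P pin (Arduino Uno naming) and its role.
struct PinUse {
    const char* pin;
    const char* role;
};

// Signal pin assignments copied from the connection list above.
static const PinUse kPinMap[] = {
    {"D2", "Motor 1 DIR"},       {"D3", "Motor 1 STEP"},
    {"D4", "Motor 2 DIR"},       {"D5", "Motor 2 STEP"},
    {"D6", "SoftwareSerial RX"}, {"D7", "SoftwareSerial TX"},
    {"D8", "Control button A"},  {"D9", "Control button B"},
    {"D10", "Control button C"}, {"D11", "Control button D"},
    {"A0", "Hall sensor 1"},     {"A1", "Hall sensor 2"},
    {"A2", "Speed pot"},         {"A3", "Station pot"},
    {"A4", "OLED SDA"},          {"A5", "OLED SCL"},
};
static const std::size_t kPinCount = sizeof(kPinMap) / sizeof(kPinMap[0]);

// Hypothetical helper: returns the index of the first entry that reuses an
// earlier pin, or -1 when every pin appears only once.
int first_duplicate_pin(const PinUse* map, std::size_t count) {
    assert(map != nullptr);
    for (std::size_t i = 0; i < count; ++i) {
        for (std::size_t j = i + 1; j < count; ++j) {
            if (std::strcmp(map[i].pin, map[j].pin) == 0) {
                return static_cast<int>(j);
            }
        }
    }
    return -1;
}
```

Calling first_duplicate_pin(kPinMap, kPinCount) on this table returns -1, since each of the 16 signal pins is assigned to exactly one component.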

Circular Conveyor - Step 4: Programming ATMEGA328P-PU as the circular conveyor interface

To display custom logos on the SSD1306 OLED screen, I prepared monochromatic images by following this process.

#️⃣ First, I converted monochromatic bitmaps to compatible C data arrays by utilizing LCD Assistant.

#️⃣ Based on the SSD1306 screen type, I selected the Horizontal byte orientation.

#️⃣ After converting all logos successfully, I created a header file — logo.h — to store them.

#️⃣ I installed the libraries required to control the attached electronic components:

📚 SoftwareSerial (built-in) | Inspect

📚 Adafruit_SSD1306 | Download

📚 Adafruit-GFX-Library | Download
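To make the Horizontal byte orientation chosen during the logo conversion more concrete, here is a minimal desktop C++ sketch of that layout. It assumes the convention used by the Adafruit GFX drawBitmap function: rows are stored left to right, each row is padded to a whole number of bytes, and the most significant bit of every byte is the leftmost pixel. The function names (bytes_per_row, pack_horizontal, pixel_at) are hypothetical and only illustrate the packing; they are not part of LCD Assistant or the Adafruit libraries.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Bytes needed for one bitmap row: rows are padded up to a whole byte.
std::size_t bytes_per_row(std::size_t width) {
    return (width + 7) / 8;
}

// Pack a row-major pixel grid (true = lit) into horizontal bytes,
// with the most significant bit holding the leftmost pixel of each byte.
std::vector<std::uint8_t> pack_horizontal(const std::vector<bool>& pixels,
                                          std::size_t width,
                                          std::size_t height) {
    assert(pixels.size() == width * height);
    const std::size_t stride = bytes_per_row(width);
    std::vector<std::uint8_t> out(stride * height, 0);
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            if (pixels[y * width + x]) {
                out[y * stride + x / 8] |= static_cast<std::uint8_t>(0x80 >> (x % 8));
            }
        }
    }
    return out;
}

// Read one pixel back from a horizontally packed bitmap.
bool pixel_at(const std::vector<std::uint8_t>& bitmap,
              std::size_t width, std::size_t x, std::size_t y) {
    const std::uint8_t mask = static_cast<std::uint8_t>(0x80 >> (x % 8));
    return (bitmap[y * bytes_per_row(width) + x / 8] & mask) != 0;
}
```

With this layout, a full-screen 128x64 logo occupies bytes_per_row(128) * 64 = 1024 bytes, which is worth keeping in mind when storing several logos in logo.h.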

📁 ai_driven_surface_defect_detection_circular_sprocket_conveyor.ino

⭐ Include the required libraries.

#include <SoftwareSerial.h>

#include <Adafruit_GFX.h>

#include <Adafruit_SSD1306.h>

⭐ Import custom logos (C data arrays).

#include "logo.h"

⭐ Declare a software serial port to communicate with Raspberry Pi 5.

SoftwareSerial rasp_pi_5 (6, 7); // RX, TX

⭐ Define the SSD1306 display configurations and declare the SSD1306 class instance.

#define SCREEN_WIDTH 128 // OLED display width, in pixels

#define SCREEN_HEIGHT 64 // OLED display height, in pixels

#define OLED_RESET -1 // Reset pin # (or -1 if sharing Arduino reset pin)

Adafruit_SSD1306 display(SCREEN_WIDTH, SCREEN_HEIGHT, &Wire, OLED_RESET);

⭐ Define the analog pins for the Hall-effect sensor modules (KY-003).

#define first_hall_effect_sensor A0

#define second_hall_effect_sensor A1

⭐ Define the digital pins for the control buttons.

#define control_button_A 8

#define control_button_B 9

#define control_button_C 10

#define control_button_D 11

⭐ Declare all of the variables required by the circular conveyor drivers by creating a struct.

struct stepper_config{

#define m_num 2

int _pins[m_num][2] = {{2, 3}, {4, 5}}; // (DIR, STEP)

// Assign the required revolution and initial speed variables based on drive sprocket conditions.

int stepsPerRevolution = 200;

int sprocket_speed = 12000;

// Assign stepper motor tasks based on the associated part.

int sprocket_1 = 0, sprocket_2 = 1;

// Declare the circular conveyor station pending time for each inference session.

int station_pending_time = 5000;

// Define the necessary potentiometer configurations for adjusting the sprocket speed and the station pending time.

int pot_speed_pin = A2, pot_speed_min = 8000, pot_speed_max = 25000;

int pot_pending_pin = A3, pot_pending_min = 3000, pot_pending_max = 30000;

};

⭐ Initiate the declared software serial port with its assigned RX and TX pins to start the data transmission process with the Raspberry Pi 5.

rasp_pi_5.begin(9600);

⭐ Activate the assigned DIR and STEP pins connected to the A4988 driver modules, controlling the Nema 17 stepper motors.

for(int i = 0; i < m_num; i++){ pinMode(stepper_config._pins[i][0], OUTPUT); pinMode(stepper_config._pins[i][1], OUTPUT); }

⭐ Initialize the SSD1306 class instance.

display.begin(SSD1306_SWITCHCAPVCC, 0x3C);

display.display();

delay(1000);

⭐ In the show_screen function, program different screen layouts (interfaces) based on the ongoing conveyor operation, the given user commands, and the real-time sensor readings.

void show_screen(char _type, int _opt){

// According to the given parameters, show the requested screen type on the SSD1306 OLED screen.

int str_x = 5, str_y = 5;

int l_h = 8, l_sp = 5;

if(_type == 'h'){

display.clearDisplay();

switch(_opt){

case 0: display.drawBitmap(str_x, str_y, home_bits, home_w, home_h, SSD1306_WHITE); break;

case 1: display.drawBitmap(str_x, str_y, adjust_bits, adjust_w, adjust_h, SSD1306_WHITE); break;

case 2: display.drawBitmap(str_x, str_y, check_bits, check_w, check_h, SSD1306_WHITE); break;

case 3: display.drawBitmap(str_x, str_y, serial_bits, serial_w, serial_h, SSD1306_WHITE); break;

case 4: display.drawBitmap(str_x, str_y, activate_bits, activate_w, activate_h, SSD1306_WHITE); break;

}

display.setTextSize(1);

(_opt == 1) ? display.setTextColor(SSD1306_BLACK, SSD1306_WHITE) : display.setTextColor(SSD1306_WHITE);

display.setCursor((SCREEN_WIDTH/2)-str_x, str_y);

display.print("1. Adjust");

str_y += 2*l_h;

(_opt == 2) ? display.setTextColor(SSD1306_BLACK, SSD1306_WHITE) : display.setTextColor(SSD1306_WHITE);

display.setCursor((SCREEN_WIDTH/2)-str_x, str_y);

display.print("2. Check");

str_y += 2*l_h;

(_opt == 3) ? display.setTextColor(SSD1306_BLACK, SSD1306_WHITE) : display.setTextColor(SSD1306_WHITE);

display.setCursor((SCREEN_WIDTH/2)-str_x, str_y);

display.print("3. Serial");

str_y += 2*l_h;

(_opt == 4) ? display.setTextColor(SSD1306_BLACK, SSD1306_WHITE) : display.setTextColor(SSD1306_WHITE);

display.setCursor((SCREEN_WIDTH/2)-str_x, str_y);

display.print("4. Activate");

display.display();

delay(500);

}

if(_type == 'a'){

int rect_w = l_h, rect_h = l_h;

display.clearDisplay();

display.drawBitmap(str_x, str_y, adjust_bits, adjust_w, adjust_h, SSD1306_WHITE);

display.setTextSize(1);

display.setTextColor(SSD1306_WHITE);

str_x = (SCREEN_WIDTH/2);

display.fillRect(str_x-rect_w-l_sp, str_y+(l_h/2)-(rect_h/2), rect_w, rect_h, SSD1306_WHITE);

display.setCursor(str_x, str_y);

display.print("Speed:");

str_x += 5*l_sp;

str_y += l_h;

display.setCursor(str_x, str_y);

display.print(current_pot_speed_value);

str_y += l_h;

display.setCursor(str_x, str_y);

display.setTextColor(SSD1306_BLACK, SSD1306_WHITE);

display.print(stepper_config.sprocket_speed);

str_x -= 5*l_sp;

str_y += 2*l_h;

display.setTextColor(SSD1306_WHITE);

display.setCursor(str_x, str_y);

display.fillRect(str_x-rect_w-l_sp, str_y+(l_h/2)-(rect_h/2), rect_w, rect_h, SSD1306_WHITE);

display.print("Pending:");

str_x += 5*l_sp;

str_y += l_h;

display.setCursor(str_x, str_y);

display.print(current_pot_pending_value);

str_y += l_h;

display.setCursor(str_x, str_y);

display.setTextColor(SSD1306_BLACK, SSD1306_WHITE);

display.print(stepper_config.station_pending_time);

display.display();

}

if(_type == 'c'){

int c_r = l_h;

display.clearDisplay();

display.drawBitmap(str_x, str_y, check_bits, check_w, check_h, SSD1306_WHITE);

display.setTextSize(1);

display.setTextColor(SSD1306_WHITE);

str_x = (SCREEN_WIDTH-check_w-(4*c_r))/3;

str_x = check_w + str_x + c_r + l_sp;

str_y += 2*l_h;

(!digitalRead(control_button_A)) ? display.fillCircle(str_x, str_y, c_r, SSD1306_WHITE) : display.drawCircle(str_x, str_y, c_r, SSD1306_WHITE);

display.setCursor(str_x-(l_h/2)-1, l_sp/2);

display.print("CW");

str_x = SCREEN_WIDTH - c_r - (2*l_sp);

(!digitalRead(control_button_C)) ? display.fillCircle(str_x, str_y, c_r, SSD1306_WHITE) : display.drawCircle(str_x, str_y, c_r, SSD1306_WHITE);

display.setCursor(str_x-(2*l_h/3)-2, l_sp/2);

display.print("CCW");

str_x = (2*l_sp/3) + check_w;

str_y += c_r + (3*l_sp);

display.setCursor(str_x, str_y);

display.print("First_H: "); display.print(analogRead(first_hall_effect_sensor));

str_y += 2*l_sp;

display.setCursor(str_x, str_y);

display.print("Second_H: "); display.print(analogRead(second_hall_effect_sensor));

display.display();

}

if(_type == 's'){

display.clearDisplay();

display.drawBitmap(str_x, str_y, serial_bits, serial_w, serial_h, SSD1306_WHITE);

display.setTextSize(1);

display.setTextColor(SSD1306_WHITE);

str_x += serial_w + 3*l_sp;

display.setCursor(str_x, str_y);

display.print("Serial");

str_y += l_h;

display.setCursor(str_x, str_y);

display.print("Initiated!");

str_y += 3*l_h;

display.setCursor(str_x, str_y);

display.print("Response: "); display.print(rasp_pi_5_res);

display.display();

}

if(_type == 'r'){

display.clearDisplay();

str_x = (SCREEN_WIDTH-activate_w)/2;

str_y = (SCREEN_HEIGHT-activate_h)/2;

display.drawBitmap(str_x, str_y, activate_bits, activate_w, activate_h, SSD1306_WHITE);

display.display();

}

}

⭐ In the rasp_pi_5_response function, wait until Raspberry Pi 5 successfully sends a response to the transmitted data packet via serial communication.

⭐ Once the retrieved data packet is processed, halt the loop checking for the response data packets.

⭐ If Raspberry Pi 5 does not send a response in the given timeframe (station pending time), terminate the loop as well.

⭐ Finally, return the fetched response.
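Before the Arduino implementation, the polling-with-timeout idea described in these steps can be modeled in plain desktop C++. This is only a sketch under stated assumptions, not the firmware itself: WaitResult and wait_for_response are hypothetical names, the poll callback stands in for reading the software serial port, and the elapsed time is tracked in milliseconds so that the limit is expressed directly in the units of the station pending time. The 'n' sentinel for "no response yet" is the same one the firmware uses.

```cpp
#include <cassert>
#include <functional>

// Result of one wait: the character received ('n' if none) and the time spent.
struct WaitResult {
    char response;
    long waited_ms;
};

// Hypothetical host-side model of the wait loop. poll() returns the received
// character, or 'n' when nothing has arrived yet.
WaitResult wait_for_response(const std::function<char()>& poll,
                             long poll_interval_ms,
                             long timeout_ms) {
    assert(poll_interval_ms > 0);
    long waited_ms = 0;
    char response = 'n';
    // Keep polling until a response arrives or the timeout is reached.
    while (response == 'n' && waited_ms < timeout_ms) {
        response = poll();
        waited_ms += poll_interval_ms;  // stands in for delay(poll_interval_ms)
    }
    return {response, waited_ms};
}
```

For instance, with a 500 ms polling interval and a 5000 ms limit, a source that never answers makes the function give up after ten polls and return 'n', while an answer on the third poll is returned after 1500 ms of modeled waiting.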

char rasp_pi_5_response(){

char rasp_pi_response = 'n';

int port_wait = 0;

// Wait until Raspberry Pi 5 successfully sends a response to the transmitted data packet via serial communication.

while(rasp_pi_5_ongoing_transmission){

port_wait++;

while(rasp_pi_5.available() > 0){

rasp_pi_response = rasp_pi_5.read();

}

delay(500);

// Halt the loop once Raspberry Pi 5 returns a data packet (response) or does not respond in the given timeframe (station pending time).

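// Each pass of the loop waits 500 ms, so convert the pass count to milliseconds before comparing it with the station pending time.

if(rasp_pi_response != 'n' || (long)port_wait * 500 > stepper_config.station_pending_time){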

rasp_pi_5_ongoing_transmission = false;

}

}

// Then, return the retrieved response.

delay(500);

return rasp_pi_response;

}

⭐ In the send_data_packet_to_rasp_pi_5 function, transfer the passed data packet to Raspberry Pi 5 via serial communication.

⭐ Suspend code flow until acquiring a response from Raspberry Pi 5.

void send_data_packet_to_rasp_pi_5(String _data){

rasp_pi_5_res = 'o';

// Send the passed data packet to Raspberry Pi 5 via serial communication.

rasp_pi_5.println(_data);

// Suspend code flow until getting a response from Raspberry Pi 5.

rasp_pi_5_ongoing_transmission = true; rasp_pi_5_res = rasp_pi_5_response();

delay(1000);

}

⭐ In the conveyor_move function, based on the passed direction and step number, rotate two stepper motors driving the sprockets simultaneously to move the conveyor chain precisely.

- Clockwise [CW]: rotate stepper motors in the same direction (right) at the same velocity.

- Counterclockwise [CCW]: rotate stepper motors in the same direction (left) at the same velocity.
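The direction rule above, together with the stepsPerRevolution value of 200 from the stepper configuration, can be illustrated with a small desktop C++ sketch. It is a rough model only: dir_level and steps_for_degrees are hypothetical helpers, and the step arithmetic assumes the A4988 drivers run in full-step mode, which the wiring list does not state explicitly.

```cpp
#include <cassert>
#include <string>

// Value taken from the stepper configuration struct (stepsPerRevolution).
const int kStepsPerRevolution = 200;

// Level written to the DIR pins of both A4988 drivers:
// true models HIGH (CW), false models LOW (CCW).
bool dir_level(const std::string& dir) {
    assert(dir == "CW" || dir == "CCW");
    return dir == "CW";
}

// Hypothetical helper: whole steps needed for a rotation given in degrees,
// assuming full-step mode (1.8 degrees per step at 200 steps per revolution).
long steps_for_degrees(long degrees) {
    assert(degrees >= 0);
    return degrees * kStepsPerRevolution / 360;
}
```

Under these assumptions, a quarter turn of a drive sprocket corresponds to steps_for_degrees(90), that is 50 steps, applied to both motors with the same DIR level.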

void conveyor_move(int step_number, int acc, String _dir){

/*

Move the sprocket-driven circular conveyor stations by controlling the rotation of the associated stepper motors.

Clockwise [CW]: rotate stepper motors in the same direction (right) at the same velocity.

Counterclockwise [CCW]: rotate stepper motors in the same direction (left) at the same velocity.

*/

if(_dir == "CW"){

digitalWrite(stepper_config._pins[stepper_config.sprocket_1][0], HIGH);

digitalWrite(stepper_config._pins[stepper_config.sprocket_2][0], HIGH);

}

if(_dir == "CCW"){

digitalWrite(stepper_config._pins[stepper_config.sprocket_1][0], LOW);

digitalWrite(stepper_config._pins[stepper_config.sprocket_2][0], LOW);

}

for(int i = 0; i < step_number; i++){