Story

Sometimes technologies find new meaning by accident.

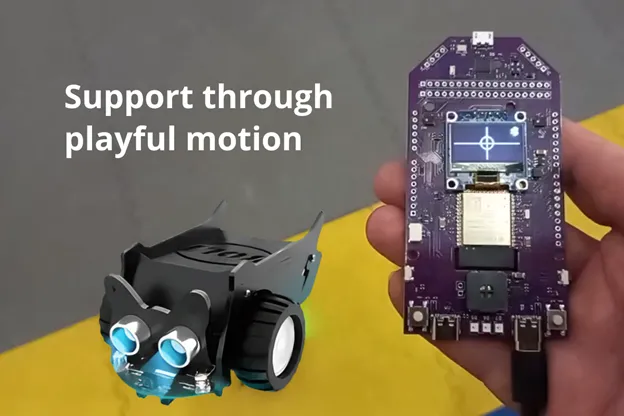

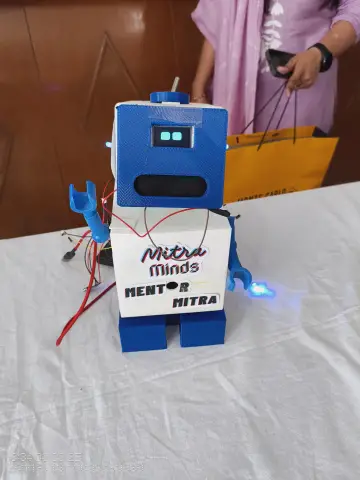

VoxControl, our ESP32-S3 module with offline voice recognition and an IMU, was built to control small robots such as the Elecrow CrowBot. It recognizes short spoken commands, senses tilt, and sends the resulting control signals to the robot over Bluetooth, with no cloud connection and no noticeable delay.

Originally created for experiments, this simple setup revealed another side — a gentle, playful way to practice speech, attention, and small coordinated movements.

A small, local project that might matter to someone close to you.

Note: This is a DIY project. It’s meant to inspire and support — not to diagnose or treat.

Rehabilitation-Style Scenarios

And just like that, simple voice and motion control turned into a small set of playful exercises.

Not therapy, but gentle activities that echo techniques often used when people rebuild speech, attention, or small coordinated movements after difficult periods — stroke recovery, long immobility, neurological fatigue, or age-related decline.

Sometimes tiny actions with clear feedback can make practice feel lighter.

1. Simple Reaction Game

Say “forward” — the robot moves. Say “stop” — it halts.

Trains articulation, attention, and control, all through play.
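A minimal sketch of the game logic. It assumes the robot side receives each recognized phrase as a string such as "FORWARD" or "STOP". The class name `ReactionGame` and the scoring rule (one point for each STOP that actually halts a moving robot) are illustrative assumptions, not part of the shipped CrowBot firmware.

```python
class ReactionGame:
    """Hypothetical two-command game: FORWARD starts the robot, STOP halts it."""

    def __init__(self):
        self.moving = False
        self.score = 0  # assumed scoring: one point per successful stop

    def on_command(self, phrase: str) -> str:
        """Update the state for one recognized phrase and return the motor action."""
        cmd = phrase.strip().upper()
        if cmd == "FORWARD":
            self.moving = True
        elif cmd == "STOP":
            if self.moving:
                self.score += 1  # only a stop that actually halts the robot counts
            self.moving = False
        # any other phrase leaves the state unchanged
        return "drive" if self.moving else "halt"


game = ReactionGame()
for word in ["forward", "stop", "stop", "forward", "left", "stop"]:
    print(word, "->", game.on_command(word))
print("score:", game.score)
```

Unrecognized phrases are ignored on purpose. A misheard word then never starts the robot by accident.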

2. Voice + Motion Coordination

Say “left” and tilt slightly left — CrowBot turns.

Practices linking speech with movement, a skill that is often a focus during stroke recovery.
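One way to express "voice and tilt must agree" is shown below. It assumes the robot receives a voice phrase plus a roll angle in degrees, with negative roll meaning a tilt to the left. The 10° threshold and the names `tilt_direction` and `coordinated_turn` are hypothetical. The example firmware may use different units and thresholds.

```python
from typing import Optional

TILT_THRESHOLD_DEG = 10.0  # assumed minimum roll that counts as a deliberate tilt


def tilt_direction(roll_deg: float) -> str:
    """Classify roll as LEFT, RIGHT or LEVEL (negative roll assumed to mean left)."""
    if roll_deg <= -TILT_THRESHOLD_DEG:
        return "LEFT"
    if roll_deg >= TILT_THRESHOLD_DEG:
        return "RIGHT"
    return "LEVEL"


def coordinated_turn(phrase: str, roll_deg: float) -> Optional[str]:
    """Return a turn action only when the spoken direction matches the tilt."""
    spoken = phrase.strip().upper()
    if spoken in ("LEFT", "RIGHT") and spoken == tilt_direction(roll_deg):
        return "turn_" + spoken.lower()
    return None  # mismatch or no tilt: the robot waits


print(coordinated_turn("left", -15.0))   # turn_left
print(coordinated_turn("left", 3.0))     # None
print(coordinated_turn("right", -20.0))  # None
```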

3. Guided Path Challenge

CrowBot follows a route only when voice and motion commands align.

A playful way to train planning, timing, and motor control.
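The guided-path idea can be sketched as a route of expected (phrase, tilt) pairs. The robot advances one step only when both inputs match the current step, and mismatches are counted. The route format and the `GuidedPath` class are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GuidedPath:
    """Hypothetical route: each step pairs a spoken command with an expected tilt."""
    steps: List[Tuple[str, str]]  # e.g. [("FORWARD", "FORWARD"), ("LEFT", "LEFT")]
    position: int = 0
    mistakes: int = 0

    def finished(self) -> bool:
        return self.position == len(self.steps)

    def submit(self, phrase: str, tilt: str) -> bool:
        """Advance one step if voice and tilt both match; otherwise count a mistake."""
        if self.finished():
            return False
        if (phrase.upper(), tilt.upper()) == self.steps[self.position]:
            self.position += 1
            return True
        self.mistakes += 1
        return False


route = GuidedPath([("FORWARD", "FORWARD"), ("LEFT", "LEFT"), ("STOP", "LEVEL")])
print(route.submit("forward", "forward"))  # True
print(route.submit("left", "right"))       # False
print(route.submit("left", "left"))        # True
print(route.submit("stop", "level"))       # True
print(route.finished(), route.mistakes)    # True 1
```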

These are not clinical protocols — just open ideas for creative exploration.

Maybe one of them will grow into something that truly helps someone nearby.

Why It’s Awesome

- Fully offline: Voice recognition and motion logic run entirely on the ESP32-S3.

- Voice + motion synergy: Speak a command and tilt the board; the robot receives both inputs together.

- Playful motivation: Turns repetition into a game with instant feedback.

- Accessible and open: Built from hobbyist parts.

- Human purpose: A way for makers to build something that could help others.

How It Works

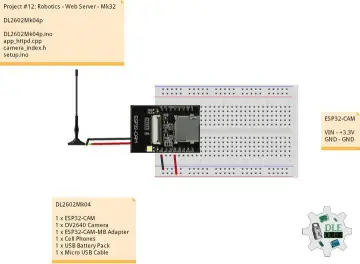

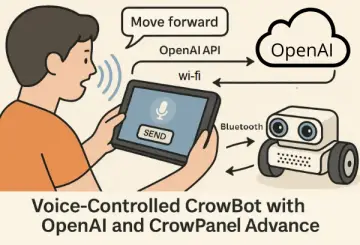

1. VoxControl (ESP32-S3) recognizes short voice commands locally.

2. The onboard IMU sensor detects tilt and small hand movements.

3. Both voice commands and IMU data are sent over Bluetooth to the CrowBot.

4. The CrowBot firmware interprets these inputs and turns them into movement — or any other actions you choose to program.

Instant reaction, no cloud, no lag.
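The real VoxControl wire format is not documented here. The sketch below only illustrates how voice and tilt messages could share one Bluetooth link, and its layout is purely hypothetical. A voice packet is a type byte, a length byte and an ASCII phrase. A tilt packet is a type byte plus pitch and roll as signed 16-bit tenths of a degree. The encoding uses Python's standard `struct` module.

```python
import struct

# Hypothetical message types; the real VoxControl protocol may differ entirely.
MSG_VOICE = 0x01
MSG_TILT = 0x02


def encode_voice(phrase: str) -> bytes:
    """Type byte, length byte, then the phrase as ASCII."""
    data = phrase.upper().encode("ascii")
    return struct.pack("<BB", MSG_VOICE, len(data)) + data


def encode_tilt(pitch_deg: float, roll_deg: float) -> bytes:
    """Type byte plus pitch and roll as little-endian int16 tenths of a degree."""
    return struct.pack("<Bhh", MSG_TILT, round(pitch_deg * 10), round(roll_deg * 10))


def decode(packet: bytes):
    """Return ("voice", phrase) or ("tilt", pitch, roll) for one packet."""
    kind = packet[0]
    if kind == MSG_VOICE:
        length = packet[1]
        return ("voice", packet[2:2 + length].decode("ascii"))
    if kind == MSG_TILT:
        _, pitch, roll = struct.unpack("<Bhh", packet)
        return ("tilt", pitch / 10, roll / 10)
    raise ValueError(f"unknown message type {kind:#x}")


print(decode(encode_voice("go slowly")))  # ('voice', 'GO SLOWLY')
print(decode(encode_tilt(12.5, -4.0)))    # ('tilt', 12.5, -4.0)
```

Sending angles as small integers keeps each tilt packet to 5 bytes. That matters when tilt is streamed continuously alongside voice commands.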

Under the Hood

1. Ready-to-use firmware — works straight out of the box

VoxControl comes with preloaded firmware that already supports:

- offline recognition of several default commands

- adding and updating custom voice commands through the companion configuration app

- IMU-based tilt sensing (forward / back / left / right)

- Bluetooth transmission of recognized commands + tilt data

- example CrowBot firmware with built-in handlers for both voice and tilt input

The whole workflow works immediately — you can use it as-is or adapt the robot’s logic however you like.

2. Add your own voice commands (no training required)

The examples below are just illustrations — any text phrase can become a command, as long as you write it in plain letters:

- GO SLOWLY

- TURN LIGHTS

- MOVE BACKWARD

- START SPINNING

- STOP MOTION
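The exact rules the companion app applies to "plain letters" are not stated here. The sketch below assumes they mean uppercase Latin letters A–Z separated by single spaces, with no digits or punctuation. It normalizes a phrase and checks it against that assumed rule before you type it into the app. `normalize_phrase` and `is_plain_phrase` are hypothetical helpers.

```python
import re

# Assumed rule: only A-Z letters separated by single spaces (no digits or punctuation).
PLAIN_PHRASE = re.compile(r"[A-Z]+( [A-Z]+)*")


def normalize_phrase(text: str) -> str:
    """Uppercase and collapse whitespace, e.g. '  go   slowly ' -> 'GO SLOWLY'."""
    return " ".join(text.upper().split())


def is_plain_phrase(text: str) -> bool:
    """True if the normalized phrase contains only plain letters and single spaces."""
    return PLAIN_PHRASE.fullmatch(normalize_phrase(text)) is not None


for p in ["go slowly", "Turn  lights", "turn lights 2", "stop!"]:
    print(repr(normalize_phrase(p)), is_plain_phrase(p))
```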

To add new commands:

Simply type your phrases in the companion configuration app (included with VoxControl) and send them to the board.

Once transferred, VoxControl begins recognizing them instantly — with no model training, no cloud, and no extra tools.

CrowBot will receive these new commands the same way as the default ones, and you can extend its handlers to define any behavior you want.
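Extending the robot's handlers amounts to mapping each phrase to an action. The sketch below uses a dictionary-based registry in Python to show that pattern. The registry, the decorator `on_phrase`, the `dispatch` function and the 30% speed are all hypothetical, and the actual CrowBot firmware defines its own handler structure.

```python
from typing import Callable, Dict

# Hypothetical registry: phrase -> handler. The real CrowBot firmware defines its own.
HANDLERS: Dict[str, Callable[[], str]] = {}


def on_phrase(phrase: str):
    """Decorator that registers a handler for one spoken phrase."""
    def register(func: Callable[[], str]) -> Callable[[], str]:
        HANDLERS[phrase.upper()] = func
        return func
    return register


@on_phrase("GO SLOWLY")
def go_slowly() -> str:
    return "drive at 30% speed"  # assumed speed, for illustration only


@on_phrase("START SPINNING")
def start_spinning() -> str:
    return "rotate in place"


def dispatch(phrase: str) -> str:
    """Run the handler for a received phrase; phrases without a handler are ignored."""
    handler = HANDLERS.get(phrase.upper())
    return handler() if handler else "ignored"


print(dispatch("go slowly"))    # drive at 30% speed
print(dispatch("TURN LIGHTS"))  # ignored
```

Because new phrases arrive like the default ones, adding behavior means registering one more handler. The receiving code does not change.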

3. IMU as a second control channel

While the board is in tilt-control mode, VoxControl continuously streams tilt data from the onboard IMU: forward/back and left/right.

These orientation values are transmitted alongside voice commands, giving CrowBot two parallel input streams to work with.

The example CrowBot firmware already includes a tilt-processing block, which you can easily adapt:

- adjust sensitivity and thresholds

- map tilt to steering or speed

- combine tilt + voice for compound actions

- define your own response logic

Tilt + voice becomes a flexible input system you can shape to any scenario.
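The sketch below shows the kind of tilt processing described above. It converts a static accelerometer reading to pitch and roll with the standard `atan2` tilt formulas. It then maps each angle to a −1..1 command with a dead zone (sensitivity threshold) and linear scaling. Axis signs depend on how the IMU is mounted. `DEAD_ZONE_DEG`, `MAX_TILT_DEG` and the function names are assumptions, not values from the example firmware.

```python
import math

DEAD_ZONE_DEG = 8.0   # assumed: tilts smaller than this are ignored
MAX_TILT_DEG = 35.0   # assumed: tilt at which the output saturates


def tilt_from_accel(ax: float, ay: float, az: float):
    """Pitch and roll in degrees from a static accelerometer reading (any units).

    These are the common textbook formulas; signs depend on the IMU mounting.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def to_command(angle_deg: float) -> float:
    """Map an angle to -1..1 with a dead zone and linear scaling up to MAX_TILT_DEG."""
    magnitude = abs(angle_deg)
    if magnitude < DEAD_ZONE_DEG:
        return 0.0
    scaled = (magnitude - DEAD_ZONE_DEG) / (MAX_TILT_DEG - DEAD_ZONE_DEG)
    return math.copysign(min(scaled, 1.0), angle_deg)


# Example reading tilted about 20 degrees around the pitch axis, level in roll.
pitch, roll = tilt_from_accel(-0.34, 0.0, 0.94)
speed, steering = to_command(pitch), to_command(roll)
print(round(pitch, 1), round(speed, 2), steering)
```

Here pitch drives speed and roll drives steering. Swapping that mapping, or scaling speed by a voice command such as "GO SLOWLY", only changes the last two lines.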

Quick Start

What you need

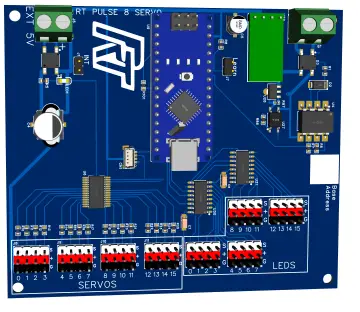

- VoxControl (ESP32-S3) — with built-in voice + IMU + Bluetooth

- CrowBot — the robot base that will interpret your commands

That’s all.

1. Flash the CrowBot firmware

Download the CrowBot firmware from the GitHub repository listed under Resources & Links and flash it to the CrowBot controller.

This firmware already includes:

- Bluetooth receiver

- handlers for voice commands

- handlers for tilt-control mode

2. Power on VoxControl

It boots with ready-to-use firmware:

- listens for voice commands

- streams tilt data

- sends everything to CrowBot over Bluetooth

No setup required.

3. Start experimenting

Hold VoxControl in your hand, say a command, tilt slightly — CrowBot reacts.

Try the built-in commands or add your own phrases through the companion app.

In under a minute, you have a fully offline voice-and-motion control system ready for play, demos, or rehabilitation-style exercises.

What’s Next

Once you try the basic voice-and-tilt control, the platform naturally invites extension.

Everything runs locally in firmware you can modify, so new modes only require software changes, not new hardware.

Add richer command sets

Use the companion app to load new phrases — longer, more specific, or more playful.

CrowBot will receive them like any other command, and you can map each phrase to new actions or behaviors.

Create your own movement logic

Modify the CrowBot firmware to introduce:

- gradual speed control

- gesture-based steering

- special sequences (circle, zig-zag, patterns)

- LED or buzzer feedback

The robot becomes a canvas for your own interaction ideas.
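Gradual speed control can be as simple as moving the current speed toward a target by a bounded step on each control-loop tick. This sketch shows the idea. The `ramp` function name, the 0–100 % speed scale and the step size are hypothetical choices.

```python
def ramp(current: float, target: float, max_step: float) -> float:
    """Move current toward target by at most max_step (one control-loop tick)."""
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    delta = target - current
    if abs(delta) <= max_step:
        return target
    return current + max_step if delta > 0 else current - max_step


# Hypothetical example: ramp motor speed (0-100 %) toward 60 % in steps of 15 %.
speed = 0.0
history = []
while speed != 60.0:
    speed = ramp(speed, 60.0, 15.0)
    history.append(speed)
print(history)  # [15.0, 30.0, 45.0, 60.0]
```

Snapping to the target once it is within one step guarantees the loop ends exactly on the target value.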

Build your own scenarios

You can design:

- guided-path challenges

- timing or rhythm-based tasks

- mixed voice + motion coordination exercises

It’s all just input → logic → action.
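As an example of input → logic → action for a rhythm-based task, the sketch below checks whether the spoken commands arrive at a steady pace. The input is command timestamps, the logic compares each gap to a target interval within a tolerance, and the result could drive feedback such as an LED or buzzer. The function name, the 2-second interval and the 0.3-second tolerance are assumptions for illustration.

```python
from typing import List


def rhythm_hits(timestamps: List[float], interval_s: float, tolerance_s: float) -> List[bool]:
    """For each command after the first, check whether the gap since the previous
    command is within tolerance_s of the target interval_s."""
    return [
        abs((later - earlier) - interval_s) <= tolerance_s
        for earlier, later in zip(timestamps, timestamps[1:])
    ]


# Hypothetical session: the player says "FORWARD" roughly every 2 seconds.
times = [0.0, 2.1, 4.0, 6.9, 8.8]
hits = rhythm_hits(times, interval_s=2.0, tolerance_s=0.3)
print(hits, f"{sum(hits)}/{len(hits)} on beat")
```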

Community & Feedback

If you try this project or adapt it in your own way, feel free to share what worked for you.

Short notes, small videos, or simple comments can show others how you approached the idea. Even a 10–20 second clip of your setup in motion shows what the project can do in real life.

If you extend the command set or experiment with new movement logic, you’re welcome to open an issue or a pull request in the GitHub repo. It may help someone exploring a similar path.

And if this setup happens to support someone close to you, even in a small way, telling that story might inspire others to try their own version.

⚠️ Disclaimer

This project uses hobbyist hardware and is not a medical device.

It does not replace professional therapy or medical supervision.

Resources & Links

- Firmware & Source Code: GitHub repository

- VoxControl Module: product page on Elecrow

- CrowBot Robot Base: product page on Elecrow